Market Overview of Cloud Server Providers

The cloud server provider market is a dynamic and rapidly evolving landscape, characterized by intense competition among a few dominant players and a growing number of niche providers. This market is driven by the increasing adoption of cloud computing across various industries and the continuous innovation in cloud technologies. Understanding the market share, geographic distribution, and prevailing trends is crucial for businesses seeking to leverage cloud services effectively.

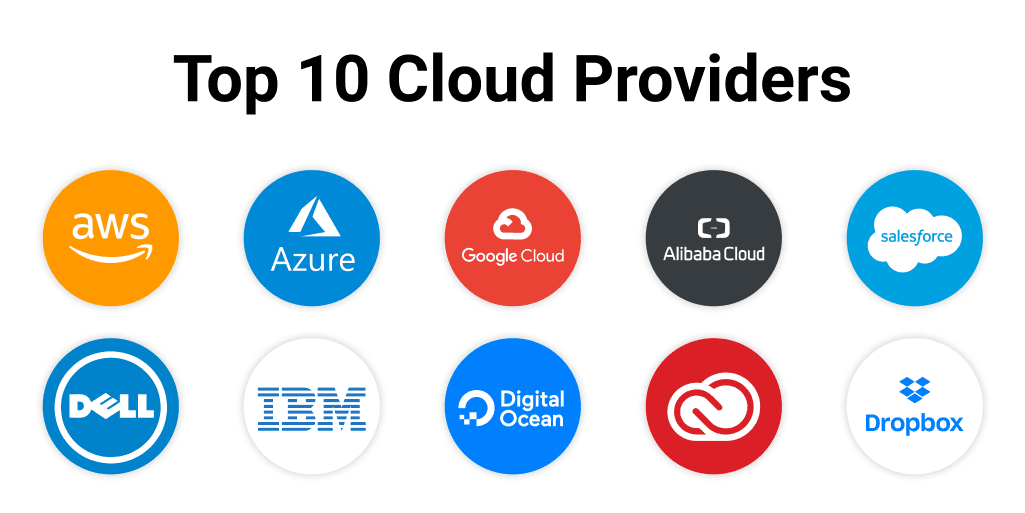

Market Share of Top Cloud Server Providers

The cloud server provider market is largely dominated by a handful of hyperscalers. Precise market share figures fluctuate depending on the source and methodology, but Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) consistently hold the largest shares and together account for well over half of the global market. Other significant players include Alibaba Cloud and Oracle Cloud Infrastructure, though their shares are considerably smaller than those of the top three. Ongoing innovation and strategic acquisitions continue to shift market positions over time. AWS maintains a significant lead, benefiting from its early entry and established ecosystem. Microsoft Azure has grown strongly by leveraging its existing enterprise customer base and tight integration with Microsoft's other software offerings. Google Cloud Platform, while competitive across the board, has differentiated itself particularly in data analytics and machine learning.

Geographic Distribution of Cloud Server Providers

Cloud server providers maintain a global presence, with data centers strategically located across regions to reduce latency and comply with local regulations. North America and Europe currently represent the largest markets for cloud services, driven by high rates of technology adoption and robust digital economies. However, Asia-Pacific is growing quickly, particularly in countries such as China, India, and Japan, fueled by increasing digitalization and economic expansion. The geographic distribution of cloud services is also shaped by government regulations, data sovereignty concerns, and the availability of a skilled workforce. The ongoing expansion of cloud infrastructure into emerging markets points toward a more geographically diverse landscape.

Current Trends Shaping the Cloud Server Provider Market

Several key trends are shaping the future of the cloud server provider market. Edge computing, which processes data closer to where it is generated, reduces latency for applications that need real-time responses. Because it requires more decentralized infrastructure, it is pushing providers to extend their platforms beyond centralized data centers. Serverless computing, in which the provider manages the underlying servers and developers pay only for the compute time their code actually consumes, is simplifying application development and deployment.

Sustainability is another significant trend: cloud providers are increasingly investing in renewable energy and energy-efficient technologies to reduce their carbon footprint. Finally, continued advances in artificial intelligence (AI) and machine learning (ML) are driving demand for cloud-based AI/ML platforms and services, intensifying innovation and competition in this space. For example, AI applications in healthcare depend on cloud infrastructure that can store large datasets and run computationally intensive models.
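The pay-per-use billing behind serverless computing can be sketched with a little arithmetic. This minimal illustration assumes a model that charges per request plus per GB-second of execution (memory allocated × run time); the rates are hypothetical placeholders rather than any provider's current prices, and free tiers are ignored.

```python
# Hypothetical unit prices (USD) for illustration only; check your provider's pricing page.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def serverless_monthly_cost(invocations: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate a monthly bill from request count and GB-seconds of execution."""
    # GB-seconds = number of runs x seconds per run x memory allocated in GB
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = gb_seconds * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)


# 3 million invocations a month, 200 ms each, with 512 MB of memory allocated
print(serverless_monthly_cost(3_000_000, 200, 512))
# An idle function costs nothing under this model
print(serverless_monthly_cost(0, 200, 512))
```

The second call shows the defining property of the model: when no code runs, nothing is billed.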

Pricing Models and Cost Structures

Understanding the pricing models and cost structures of cloud server providers is crucial for effective budget management and resource allocation. Different providers offer various pricing options, each with its own advantages and disadvantages, making it essential to carefully evaluate your needs before committing to a specific plan. This section will explore the common pricing models and factors influencing overall cloud server costs.

Major cloud providers typically offer a range of pricing models designed to suit different usage patterns and budgets. The most common are pay-as-you-go, reserved instances, and spot instances. Pay-as-you-go, also known as on-demand pricing, bills for resources as they are consumed (typically per second or per hour), offering maximum flexibility but costing more than the alternatives for steady, long-running workloads. Reserved instances provide substantial discounts in exchange for committing to a specific amount of resources for a fixed term, usually one or three years, and the commitment is billed whether or not the capacity is used. Spot instances run on the provider's spare capacity at the lowest prices, but the provider can reclaim them on short notice, so they suit interruptible workloads such as batch jobs. Understanding these differences is key to optimizing your cloud spending.
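To make the trade-off concrete, the following sketch compares the three models for a single instance over one month. The hourly rates are hypothetical, the reservation is assumed to be billed for every hour of the month, and spot interruptions are not modeled; the point is only to show how usage hours determine which model is cheapest.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def compare_pricing_models(on_demand_rate: float, reserved_rate: float,
                           spot_rate: float, hours_used: float) -> dict:
    """Monthly cost of one instance under each model, given hours actually used."""
    return {
        "on_demand": round(on_demand_rate * hours_used, 2),
        # Assumption: a reservation is billed for every hour of the month, used or not
        "reserved": round(reserved_rate * HOURS_PER_MONTH, 2),
        # Assumption: spot is billed only for hours used; interruptions are ignored
        "spot": round(spot_rate * hours_used, 2),
    }


def reservation_break_even_hours(on_demand_rate: float, reserved_rate: float) -> float:
    """Monthly usage (hours) above which the reservation beats on-demand pricing."""
    return reserved_rate * HOURS_PER_MONTH / on_demand_rate


# Hypothetical hourly rates in USD
ON_DEMAND, RESERVED, SPOT = 0.10, 0.06, 0.03

print(compare_pricing_models(ON_DEMAND, RESERVED, SPOT, hours_used=730))  # always on
print(compare_pricing_models(ON_DEMAND, RESERVED, SPOT, hours_used=200))  # part-time
print(round(reservation_break_even_hours(ON_DEMAND, RESERVED)))
```

With these assumed rates, the reservation pays off once the instance runs more than about 438 hours a month (60% of the time); below that, on-demand is cheaper.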

Pricing Model Comparison

The following table compares the pricing of similar server configurations across three major cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Note that these are illustrative examples and actual prices may vary based on region, instance type, and other factors. It’s crucial to consult each provider’s pricing calculator for the most up-to-date and accurate cost estimations.

| Provider | Pricing Model | Instance Configuration | Cost Example (per month, estimated) |
|---|---|---|---|
| Amazon Web Services (AWS) | On-Demand (m5.large) | 2 vCPUs, 8 GiB memory; 80 GB block storage (EBS, billed separately) | $150 – $200 |
| Microsoft Azure | Pay-As-You-Go (Standard_B2s, burstable) | 2 vCPUs, 4 GiB memory; 128 GB managed disk (billed separately) | $120 – $180 |
| Google Cloud Platform (GCP) | On-Demand with Sustained Use Discount (n1-standard-2) | 2 vCPUs, 7.5 GiB memory; 100 GB persistent disk (billed separately) | $100 – $150 |
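The GCP row relies on sustained use discounts, which apply automatically as an eligible VM runs for a larger share of the month. The sketch below assumes the incremental tiers GCP has documented for N1 machine types (each successive quarter of the month billed at 100%, 80%, 60%, and 40% of the base rate); tiers differ for other machine families, the real billing calculation has further details, and the base rate here is hypothetical, so treat it as an illustration of the mechanism only.

```python
# Assumed incremental tiers for N1 machine types: (share of month, fraction of base rate).
N1_SUD_TIERS = [(0.25, 1.00), (0.25, 0.80), (0.25, 0.60), (0.25, 0.40)]


def sustained_use_cost(base_hourly_rate: float, fraction_of_month: float,
                       hours_in_month: float = 730) -> float:
    """Monthly cost of one VM when each successive quarter of usage is billed at a lower rate."""
    if not 0 <= fraction_of_month <= 1:
        raise ValueError("fraction_of_month must be between 0 and 1")
    cost = 0.0
    remaining = fraction_of_month
    for tier_share, rate_multiplier in N1_SUD_TIERS:
        used = min(remaining, tier_share)
        cost += used * hours_in_month * base_hourly_rate * rate_multiplier
        remaining -= used
        if remaining <= 0:
            break
    return cost


base_rate = 0.10  # hypothetical on-demand rate in USD per hour
full_month = sustained_use_cost(base_rate, 1.0)
list_price = base_rate * 730
print(f"effective discount: {1 - full_month / list_price:.0%}")
```

Under these tiers, a VM that runs the whole month receives a 30% effective discount, while one used for only a quarter of the month receives none.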

Factors Influencing Cloud Server Costs

Several factors significantly impact the overall cost of utilizing cloud servers. Careful consideration of these factors can lead to substantial cost savings.

These factors include:

- Compute resources: The number of virtual CPUs (vCPUs), memory (RAM), and storage space directly influence costs. Higher specifications naturally lead to higher expenses.

- Operating system: Different operating systems (e.g., Windows Server vs. Linux) have varying licensing costs, affecting the overall price.

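- Data transfer: Moving data between your cloud server and other locations (e.g., the internet or other cloud services) is charged by volume and destination. Inbound transfer is usually free, while outbound transfer to the internet and transfer between regions are typically billed per gigabyte.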

- Storage type: Choosing between different storage types (e.g., SSD vs. HDD) impacts costs, with SSD generally being more expensive but offering faster performance.

- Region: Server location influences pricing; costs can vary significantly across different geographical regions.

- Pricing model: As discussed earlier, selecting the appropriate pricing model (on-demand, reserved instances, spot instances) significantly affects overall expenses. Choosing a model that aligns with your usage patterns is vital.

- Database usage: If your application uses a database, the costs associated with database storage, compute, and operations can be substantial. Efficient database design and management are essential.

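- Network traffic: High network traffic, particularly outbound (egress) traffic, can lead to significant costs on top of the data transfer charges above. Optimizing network configuration, for example by keeping services that communicate heavily within the same region, and minimizing unnecessary data transfer are important cost-saving measures.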
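Several of these factors can be combined into a rough monthly estimate. The sketch below assumes a simple model of hourly compute, per-GB-month storage, and per-GB outbound transfer with an optional free allowance; the `Rates` values are hypothetical, and real bills include further line items such as operating system licensing, database services, and request charges.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Hypothetical unit prices in USD; real prices vary by provider, region, and tier."""
    compute_per_hour: float
    storage_per_gb_month: float
    egress_per_gb: float
    free_egress_gb: float = 0.0  # assumed monthly free outbound allowance


def estimate_monthly_cost(rates: Rates, hours: float, storage_gb: float, egress_gb: float) -> dict:
    """Break a monthly bill into compute, storage, and outbound data transfer."""
    compute = rates.compute_per_hour * hours
    storage = rates.storage_per_gb_month * storage_gb
    # Inbound transfer is assumed free; only outbound volume beyond the allowance is billed
    billable_egress_gb = max(0.0, egress_gb - rates.free_egress_gb)
    egress = rates.egress_per_gb * billable_egress_gb
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "egress": round(egress, 2),
        "total": round(compute + storage + egress, 2),
    }


rates = Rates(compute_per_hour=0.10, storage_per_gb_month=0.08, egress_per_gb=0.09, free_egress_gb=100)
print(estimate_monthly_cost(rates, hours=730, storage_gb=100, egress_gb=1100))
```

In this made-up example, outbound transfer costs more than the server itself, which illustrates why egress deserves attention when estimating cloud spending.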

Service Level Agreements (SLAs) and Reliability

Choosing a cloud provider involves careful consideration of their commitment to service reliability. Service Level Agreements (SLAs) are crucial contracts outlining the provider’s guarantees regarding uptime, performance, and support. Understanding these agreements and the metrics used to measure them is essential for businesses relying on cloud infrastructure.

Cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) each offer robust SLAs, but the specifics vary. These differences can significantly impact the overall cost and risk associated with using their services. Key metrics for evaluating reliability include uptime percentages, recovery time objectives (RTOs), and recovery point objectives (RPOs).

Comparison of SLAs from AWS, Azure, and GCP

AWS, Azure, and GCP all publish detailed SLAs, but the terms differ by service. Uptime commitments for compute services (e.g., virtual machines) are typically 99.9% or higher, with the exact figure depending on the service and deployment configuration; for example, instances spread across multiple availability zones usually carry a higher commitment than a single instance. The remedy for an SLA breach is generally a service credit, calculated as a percentage of the monthly bill that grows as measured uptime falls further below the commitment, and customers typically must request the credit themselves. A detailed comparison requires reviewing each provider's SLA documentation for every service you plan to use, since all three aim for very high availability but differ in the details.
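Uptime percentages are easier to compare when converted into a downtime budget. The sketch below assumes a 30-day month (actual SLAs define their own measurement periods and what counts as downtime). It also shows that when an application depends on several services in series, and their failures are independent, the combined availability is the product of the individual figures, which is lower than any one of them.

```python
import math


def allowed_downtime_minutes(sla_percent: float, days: int = 30) -> float:
    """Maximum downtime permitted by an uptime commitment over a period of `days`."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


def composite_availability(*component_percents: float) -> float:
    """Availability of services that must all be up (in series), assuming independent failures."""
    return 100 * math.prod(p / 100 for p in component_percents)


for sla in (99.0, 99.9, 99.95, 99.99):
    print(f"{sla}% uptime allows about {allowed_downtime_minutes(sla):.1f} minutes of downtime per 30 days")

# Hypothetical app needing both a VM (99.9%) and a managed database (99.95%)
print(f"combined: {composite_availability(99.9, 99.95):.3f}%")
```

A 99.9% commitment, for instance, leaves room for about 43 minutes of downtime in a 30-day month.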

Key Metrics for Measuring Cloud Server Reliability and Uptime

Several key metrics are used to assess the reliability and uptime of cloud servers. Uptime percentage, representing the percentage of time a service is operational, is a fundamental metric. Recovery Time Objective (RTO) defines the maximum acceptable downtime after an outage, while Recovery Point Objective (RPO) specifies the maximum acceptable data loss in case of a failure. Mean Time Between Failures (MTBF) measures the average time between system failures, while Mean Time To Recovery (MTTR) indicates the average time it takes to restore service after a failure. These metrics, along with others like latency and error rates, provide a comprehensive picture of a cloud provider’s reliability.
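The relationship between these metrics can be shown with a short calculation. Assuming MTBF is measured as mean operating (up) time between failures, steady-state availability is MTBF / (MTBF + MTTR). The sketch derives all three from a hypothetical list of outage durations over an observation window; definitions of MTBF vary between organizations, so this is one common convention rather than a standard.

```python
from typing import Sequence


def reliability_metrics(window_hours: float, outage_hours: Sequence[float]) -> dict:
    """Derive MTBF, MTTR, and availability from outage durations within an observation window."""
    failures = len(outage_hours)
    downtime = sum(outage_hours)
    uptime = window_hours - downtime
    if failures == 0:
        return {"mtbf": float("inf"), "mttr": 0.0, "availability": 1.0}
    mtbf = uptime / failures    # mean operating time between failures
    mttr = downtime / failures  # mean time to restore service
    return {"mtbf": mtbf, "mttr": mttr, "availability": mtbf / (mtbf + mttr)}


# Hypothetical 30-day window (720 hours) with three outages
metrics = reliability_metrics(720, [1.0, 0.5, 2.5])
print({name: round(value, 4) for name, value in metrics.items()})
```

Availability here works out to uptime divided by the window (716 / 720, about 99.44%). Because total downtime equals the number of failures times MTTR, halving MTTR improves availability as much as halving the number of failures.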

Examples of Service Disruption Handling by Cloud Providers

Providers typically employ several strategies to handle service disruptions and outages. These include proactive monitoring and preventive maintenance to reduce the likelihood of outages, along with redundancy and failover mechanisms to maintain business continuity. For example, AWS regions are divided into multiple isolated Availability Zones, each with independent power and networking; a workload deployed across several zones can keep running in the remaining zones if one data center loses power. Azure and GCP use similar techniques, including availability zones, geographically distributed regions, and load balancing that routes traffic away from failed components to avoid single points of failure. Cross-zone or cross-region failover generally has to be designed into the customer's deployment; it is not applied automatically to a single instance. During a disruption, providers communicate with customers through channels such as service health dashboards, email alerts, and social media. Afterward, customers whose measured uptime fell below the SLA commitment are typically eligible for service credits, as defined in the provider's SLA.

Security Features and Data Protection

Cloud server providers prioritize robust security measures to protect their clients’ data and infrastructure. The level of security offered varies between providers, and understanding these features is crucial for choosing the right service for your needs. This section details the security features of leading providers, their compliance certifications, and a comparison of their offerings.

Data Encryption

Data encryption is a fundamental security feature, protecting data both in transit and at rest. Leading cloud providers typically use AES-256 encryption for data at rest and TLS (the successor to SSL) for data in transit, so that data accessed or intercepted without authorization remains unreadable without the corresponding decryption key. Many providers also let customers control their own keys, either through customer-managed keys held in the provider's key management service or through client-side encryption, where data is encrypted before it is uploaded. The specific encryption methods and key management practices differ across providers, so users should review each provider's security documentation to understand its implementation.

Access Control and Identity Management

Robust access control mechanisms are essential to prevent unauthorized access to cloud resources. Providers typically offer granular access control through identity and access management (IAM) systems and role-based access control (RBAC). RBAC lets administrators assign permissions to users or groups according to their roles, so that each person can reach only the resources their job requires (the principle of least privilege). IAM systems manage user identities, authentication, and authorization, ensuring that only authorized personnel can access sensitive information. Multi-factor authentication (MFA) is also commonly offered as an additional layer of protection against unauthorized logins.
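The core idea of RBAC (users map to roles, roles map to permissions, and access is denied by default) fits in a few lines. This is a hypothetical toy model: the role names, permission strings, and the MFA rule for sensitive actions are illustrative assumptions, and real systems such as AWS IAM or Azure RBAC use much richer policy languages with resource scopes, conditions, and explicit denies.

```python
# Hypothetical roles and permissions for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"vm:read", "storage:read"},
    "operator": {"vm:read", "vm:start", "vm:stop", "storage:read"},
    "admin": {"vm:read", "vm:start", "vm:stop", "vm:delete", "storage:read", "storage:write"},
}
USER_ROLES = {"alice": {"admin"}, "bob": {"viewer"}, "carol": {"operator"}}
# Assumed policy: destructive or data-changing actions also require MFA
MFA_REQUIRED = {"vm:delete", "storage:write"}


def is_allowed(user: str, action: str, mfa_verified: bool = False) -> bool:
    """Grant an action only if one of the user's roles includes it (deny by default)."""
    roles = USER_ROLES.get(user, set())
    granted = any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
    if granted and action in MFA_REQUIRED and not mfa_verified:
        return False
    return granted


print(is_allowed("bob", "vm:stop"))                         # viewer role lacks vm:stop
print(is_allowed("alice", "vm:delete"))                     # admin, but MFA not verified
print(is_allowed("alice", "vm:delete", mfa_verified=True))  # admin with MFA
```

Unknown users fall through to an empty role set, so the default answer is always "deny".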

Intrusion Detection and Prevention

Cloud providers employ various intrusion detection and prevention systems to monitor their infrastructure for malicious activity. These systems continuously analyze network traffic and system logs for suspicious patterns, alerting administrators to potential security breaches. Many providers offer integrated security information and event management (SIEM) tools to centralize security monitoring and incident response. Proactive measures, such as regular security audits and penetration testing, are also undertaken to identify and address vulnerabilities before they can be exploited.
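As a simplified illustration of log-based detection, the sketch below flags source IP addresses with too many failed logins inside a sliding time window, a common brute-force indicator. The event format, threshold, and window length are assumptions; managed services such as Amazon GuardDuty combine many more signals than this.

```python
from collections import defaultdict, deque


def detect_brute_force(events, threshold=5, window_seconds=60):
    """Return source IPs with at least `threshold` failed logins within `window_seconds`.

    `events` is an iterable of (timestamp_seconds, source_ip, success) tuples in time order.
    """
    recent_failures = defaultdict(deque)
    flagged = set()
    for timestamp, source_ip, success in events:
        if success:
            continue
        window = recent_failures[source_ip]
        window.append(timestamp)
        # Drop failures that have slid out of the time window
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            flagged.add(source_ip)
    return flagged


# Hypothetical log events using documentation-reserved IP addresses
events = [(t, "203.0.113.7", False) for t in range(0, 60, 10)]     # 6 failures in 50 s
events += [(5, "198.51.100.2", False), (6, "198.51.100.2", True)]  # one typo, then success
events += [(t, "192.0.2.44", False) for t in range(0, 600, 120)]   # 5 failures over 8 min
events.sort()
print(detect_brute_force(events))
```

Only the first address is flagged: the third also fails five times, but too slowly to fall inside a single 60-second window.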

Compliance Certifications

Major cloud providers actively pursue industry-recognized certifications and attestations to demonstrate their commitment to security and data protection. Examples include ISO/IEC 27001 certification, SOC 2 audit reports, and PCI DSS compliance. For regulations such as HIPAA, which has no formal certification, providers instead offer eligible services and sign a business associate agreement (BAA) with customers. The certifications held by each provider vary by target market and service, and compliance usually covers specific services rather than an entire platform. Checking for relevant certifications is a crucial step in assessing a cloud provider's security posture.

Comparison of Security Features

The following table compares the security features offered by three leading cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Note that this is not an exhaustive list, and the specific features and implementations may evolve over time.

| Security Feature | AWS | Azure | GCP |
|---|---|---|---|
| Data Encryption (at rest and in transit) | AES-256, TLS | AES-256, TLS | AES-256, TLS |
| Access Control (IAM/RBAC) | AWS IAM (roles and policies) | Microsoft Entra ID (formerly Azure Active Directory), Azure RBAC | Cloud IAM (roles) |
| Intrusion and Threat Detection | Amazon GuardDuty (threat detection), Amazon Inspector (vulnerability scanning) | Microsoft Defender for Cloud (formerly Azure Security Center) | Security Command Center |
| Compliance (examples) | ISO 27001, SOC 2, PCI DSS, HIPAA-eligible services | ISO 27001, SOC 2, PCI DSS, HIPAA-eligible services | ISO 27001, SOC 2, PCI DSS, HIPAA-eligible services |

Scalability and Flexibility of Cloud Servers

Cloud servers offer a degree of scalability and flexibility that sets them apart from on-premises solutions. This advantage stems from the architecture of cloud computing, which allocates and adjusts resources dynamically based on real-time demand. Whereas scaling an on-premises setup requires upfront investment in hardware and can involve lengthy lead times, cloud servers let businesses increase or decrease computing resources quickly as needs change, controlling costs while maintaining application performance.

Cloud servers provide scalability by making it easy to add or remove computing resources such as CPU, memory, and storage. This contrasts sharply with on-premises solutions, where scaling typically involves purchasing and installing new hardware, a process that can be slow and expensive. Flexibility is further enhanced by the wide range of options cloud providers offer, including different operating systems, programming languages, and database services, allowing businesses to adapt their infrastructure to changing needs and new technologies.

Scaling a Cloud Server Instance

Imagine a rapidly growing e-commerce company anticipating a significant surge in traffic during a holiday sale. With an on-premises solution, it would need to buy additional servers well in advance, leaving that capacity underused before the sale and still risking a shortfall if demand exceeds the forecast. With cloud servers, the company can instead scale vertically (scaling up) by moving to larger instances with more CPU cores, RAM, and storage, or scale horizontally (scaling out) by adding more virtual machines to handle the expected increase in website visits. After the sale, it can reverse either change just as easily, reducing resource consumption and cost. This dynamic adjustment maintains performance during peak demand while avoiding wasted resources during quieter periods, and the speed and ease of the process is a key benefit of cloud computing.
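Horizontal scaling decisions like these are often automated with a target-tracking rule. The sketch below uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / targetMetric), clamped to a minimum and maximum. Cloud autoscaling services apply similar logic, but the function name, limits, and CPU figures here are hypothetical, and real autoscalers add cooldowns and tolerances to avoid flapping.

```python
import math


def desired_instances(current: int, current_metric: float, target_metric: float,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Proportional target tracking: scale so the per-instance metric approaches the target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current * current_metric / target_metric)
    # Clamp to the configured fleet limits
    return max(min_instances, min(max_instances, desired))


# Holiday peak: 4 instances averaging 90% CPU against a 60% target
print(desired_instances(4, 90, 60))
# After the sale: 6 instances averaging 15% CPU
print(desired_instances(6, 15, 60))
```

During the peak, the fleet grows from 4 to 6 instances; after the sale, it shrinks from 6 to 2.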

Advantages of Cloud Servers for Scaling Applications

The advantages of leveraging cloud servers for scaling applications are substantial. Firstly, it offers cost-effectiveness. Businesses only pay for the resources they consume, avoiding the upfront capital expenditure associated with on-premises solutions and the risk of over-provisioning. Secondly, it provides agility and speed. Scaling up or down can be achieved within minutes, allowing businesses to respond quickly to changing demands and market opportunities. Thirdly, it enhances efficiency. Cloud providers manage the underlying infrastructure, freeing up internal IT teams to focus on core business functions. Finally, it offers increased reliability and availability through redundancy and geographically distributed data centers.

Disadvantages of Cloud Servers for Scaling Applications

While cloud servers offer many advantages, it’s important to acknowledge potential drawbacks. Vendor lock-in can occur, making it challenging to switch providers. Security concerns, while addressed by robust security features offered by cloud providers, still require careful consideration and implementation of appropriate security measures. Furthermore, reliance on a third-party provider introduces a degree of dependency and potential for disruptions beyond the control of the business. Finally, unexpected costs can arise if scaling isn’t carefully managed and monitored, especially with unpredictable spikes in demand. Thorough planning and understanding of the pricing model are essential to mitigate this risk.

Types of Cloud Server Deployments

Choosing the right cloud server deployment model is crucial for optimizing performance, security, and cost-effectiveness. The best choice depends heavily on an organization’s specific needs, security requirements, and budget. Three primary deployment models exist: public, private, and hybrid clouds. Each offers distinct advantages and disadvantages.

Public Cloud Deployments

Public cloud deployments leverage shared resources across multiple tenants. Providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer this service. Resources are dynamically allocated and billed based on consumption. This model is highly scalable and cost-effective for businesses with fluctuating workloads or those needing quick access to resources.

- Scalability and Cost-Effectiveness: Public clouds offer unparalleled scalability, allowing businesses to easily adjust resources based on demand. This pay-as-you-go model reduces upfront capital expenditure.

- Ease of Use and Management: Providers handle infrastructure management, allowing businesses to focus on their core applications and services.

- Broad Range of Services: Public cloud providers offer a vast ecosystem of services, including databases, analytics, and machine learning tools.

However, concerns about data security and vendor lock-in are common. A company relying solely on a single public cloud provider is exposed to that provider's outages and may find migration difficult and costly if it later decides to switch providers.

Private Cloud Deployments

Private cloud deployments involve dedicated resources for a single organization. These resources can be hosted on-premises or by a third-party provider in a dedicated environment. This model offers enhanced security and control but often comes at a higher cost.

- Enhanced Security and Control: Private clouds offer greater control over data security and compliance, making them suitable for organizations with strict regulatory requirements.

- Customization and Flexibility: Organizations can tailor the infrastructure to their specific needs and integrate it seamlessly with existing systems.

- Predictable Costs (potentially): Although private clouds usually require a larger upfront investment, they can offer more predictable costs over the long run, especially for consistent workloads.

The significant drawback is the high initial investment and ongoing maintenance costs. Managing and maintaining the infrastructure requires specialized expertise and resources, which can be a substantial burden for smaller organizations.

Hybrid Cloud Deployments

Hybrid cloud deployments combine elements of both public and private clouds. This approach allows organizations to leverage the benefits of both models, using public clouds for scalability and cost-effectiveness and private clouds for sensitive data and applications.

- Flexibility and Scalability: Hybrid clouds provide flexibility to scale resources based on demand, leveraging public cloud resources during peak times and relying on private cloud for consistent workloads.

- Enhanced Security and Compliance: Sensitive data can be stored and processed in a secure private cloud environment, while less sensitive data can be handled in the more cost-effective public cloud.

- Disaster Recovery and Business Continuity: Hybrid clouds can be used to create robust disaster recovery plans, with data replicated across both public and private clouds.

The complexity of managing a hybrid cloud environment is a significant challenge. Integrating different systems and ensuring seamless data flow requires careful planning and expertise.
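A hybrid placement policy along these lines can be sketched as follows. It is a hypothetical illustration, not any product's scheduler: sensitive workloads must run on the private cloud, and non-sensitive workloads use whatever private capacity remains before bursting to the public cloud. The workload names and vCPU figures are made up.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    sensitive: bool  # e.g. subject to data-residency or regulatory constraints
    vcpus: int


def place_workloads(workloads, private_capacity_vcpus):
    """Map each workload name to "private" or "public" under a simple bursting policy."""
    placement = {}
    remaining = private_capacity_vcpus
    # Place sensitive workloads first so less critical ones cannot crowd them out
    for wl in sorted(workloads, key=lambda item: not item.sensitive):
        if wl.vcpus <= remaining:
            placement[wl.name] = "private"
            remaining -= wl.vcpus
        elif wl.sensitive:
            raise RuntimeError(f"not enough private capacity for sensitive workload {wl.name!r}")
        else:
            placement[wl.name] = "public"  # burst to the public cloud
    return placement


workloads = [
    Workload("web-frontend", sensitive=False, vcpus=4),
    Workload("patient-records", sensitive=True, vcpus=8),
    Workload("holiday-promo", sensitive=False, vcpus=8),
    Workload("billing", sensitive=True, vcpus=4),
]
print(place_workloads(workloads, private_capacity_vcpus=16))
```

Raising an error for a sensitive workload that does not fit, rather than silently bursting it, reflects the compliance motivation for keeping such data private.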

Integration with Other Cloud Services

Cloud servers rarely operate in isolation. Their true power lies in their ability to seamlessly integrate with a wide range of other cloud services, creating robust and scalable applications. This integration allows for efficient data management, enhanced security, and improved application performance. Understanding these integrations is crucial for designing and deploying effective cloud-based solutions.

Cloud servers integrate with other cloud services through well-defined APIs (Application Programming Interfaces) and standardized protocols. These APIs provide a structured way for different services to communicate and exchange data. For example, a cloud server can directly access a cloud-based database to store and retrieve application data, or it can utilize a cloud storage service to manage files and other assets. Similarly, integration with cloud networking services enables efficient communication between different parts of an application or between different cloud-based systems. These integrations often leverage technologies like RESTful APIs, message queues, and event-driven architectures to ensure reliable and efficient communication.
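The decoupling that message queues provide can be illustrated with Python's standard library. Here `queue.Queue` and a worker thread stand in for a managed queue service and a consumer process; this substitution is an assumption made to keep the sketch runnable, and a real deployment would use a provider's queue API, handle retries, and run consumers on separate machines.

```python
import json
import queue
import threading


def run_order_pipeline(orders):
    """Producer enqueues order messages; an independent consumer thread processes them."""
    messages = queue.Queue()
    fulfilled = []

    def consumer():
        while True:
            body = messages.get()
            if body is None:  # sentinel: producer has finished
                break
            order = json.loads(body)  # messages travel as serialized text, as with a real queue
            fulfilled.append({"order_id": order["order_id"], "status": "fulfilled"})

    worker = threading.Thread(target=consumer)
    worker.start()
    for order in orders:
        messages.put(json.dumps(order))  # the producer does not wait for processing
    messages.put(None)
    worker.join()
    return fulfilled


print(run_order_pipeline([{"order_id": 101}, {"order_id": 102}]))
```

Because the producer only enqueues messages, it stays responsive even when order processing is slow, which is the main benefit of this pattern.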

Examples of Improved Application Performance and Efficiency Through Integration

Effective integration can significantly improve application performance and efficiency. For instance, a managed database service such as Amazon RDS or Google Cloud SQL offloads routine administration tasks, such as patching, backups, and replication, to the provider, reducing operational overhead and letting developers focus on application logic rather than database administration. Similarly, a content delivery network (CDN) such as Cloudflare or Akamai, placed in front of a cloud server, can substantially improve responsiveness for geographically dispersed users: by caching static content at edge locations close to end users, the CDN reduces latency and load on the origin server. Another example is pairing a serverless function platform (such as AWS Lambda or Google Cloud Functions) with a cloud server, so that specific tasks scale automatically and are billed only when they run, improving resource utilization and cost-effectiveness.
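The caching behavior that lets a CDN cut latency can be sketched as a time-to-live (TTL) cache in front of an origin server. This is a simplified model assuming a fixed TTL per object; real CDNs honor HTTP caching headers such as `Cache-Control`, support purging, and run across many edge locations. The `EdgeCache` class and `fetch_from_origin` function are hypothetical names.

```python
import time


class EdgeCache:
    """Serve cached copies of static content until their time-to-live (TTL) expires."""

    def __init__(self, origin_fetch, ttl_seconds=300, clock=time.monotonic):
        self.origin_fetch = origin_fetch  # callable that retrieves content from the origin server
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # path -> (content, time cached)
        self.hits = 0
        self.misses = 0

    def get(self, path):
        now = self.clock()
        entry = self._entries.get(path)
        if entry is not None and now - entry[1] < self.ttl_seconds:
            self.hits += 1  # served from the edge, no round trip to the origin
            return entry[0]
        self.misses += 1
        content = self.origin_fetch(path)
        self._entries[path] = (content, now)
        return content


def fetch_from_origin(path):
    # Stand-in for a slower request to the origin server
    return f"<contents of {path}>"


cache = EdgeCache(fetch_from_origin, ttl_seconds=60)
for _ in range(3):
    cache.get("/static/logo.png")
print(cache.hits, cache.misses)
```

Only the first request reaches the origin; the next two are served from the cache until the TTL expires. The injectable `clock` makes expiry easy to test.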

Hypothetical Architecture Illustrating Cloud Service Integration

Imagine an e-commerce application deployed on a cloud server. This server handles user requests, processes orders, and manages product information. To improve performance and scalability, the application integrates with several other cloud services:

A. Cloud Database (e.g., Amazon RDS): The server uses a relational database to store product information, user accounts, and order details. The cloud provider manages the database infrastructure, including backups, patching, and optional high-availability replicas. The server connects to the database through a standard database driver and issues SQL queries to retrieve and update information as needed.

B. Cloud Storage (e.g., Amazon S3): Product images and other large files are stored in cloud storage. The server uses the storage service’s API to upload, download, and manage these files. This reduces the load on the server and ensures high availability of static assets.

C. Cloud CDN (e.g., Cloudflare): Static content, such as images and CSS files, is cached on a CDN. This significantly reduces latency for users accessing the application from different geographic locations, improving performance and user experience.

D. Cloud Load Balancer (e.g., AWS Elastic Load Balancing): A load balancer distributes incoming traffic across multiple instances of the cloud server, ensuring high availability and preventing overload. This increases the application’s resilience and scalability.

E. Cloud Monitoring and Logging (e.g., Amazon CloudWatch; Google Cloud Monitoring and Cloud Logging, formerly Stackdriver): The application integrates with monitoring and logging services to track its performance, identify errors, and troubleshoot issues. This provides valuable insights into the application's health and helps ensure its smooth operation.

This architecture demonstrates how a cloud server, by integrating with various other cloud services, creates a highly available, scalable, and efficient e-commerce platform. The individual services handle specific tasks, freeing up the server to focus on its core function, ultimately leading to a more robust and performant application.
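The split of responsibilities in this architecture can be sketched in a few lines: relational data comes from the database, while image bytes never pass through the server because product pages link to the CDN. In this runnable sketch, an in-memory SQLite database stands in for a managed service such as Amazon RDS, and `cdn.example.com` is a placeholder hostname assumed to front the object storage bucket.

```python
import sqlite3

CDN_BASE_URL = "https://cdn.example.com"  # hypothetical CDN hostname in front of object storage


def init_db(conn):
    conn.execute(
        "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL, "
        "price_cents INTEGER NOT NULL, image_key TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO products (id, name, price_cents, image_key) VALUES (?, ?, ?, ?)",
        [
            (1, "Desk lamp", 2999, "images/desk-lamp.jpg"),
            (2, "Office chair", 14900, "images/office-chair.jpg"),
        ],
    )
    conn.commit()


def get_product_page(conn, product_id):
    """Read product data from the database and point the image at the CDN, not the server."""
    row = conn.execute(
        "SELECT name, price_cents, image_key FROM products WHERE id = ?", (product_id,)
    ).fetchone()
    if row is None:
        return None
    name, price_cents, image_key = row
    return {"name": name, "price": f"${price_cents / 100:.2f}", "image_url": f"{CDN_BASE_URL}/{image_key}"}


conn = sqlite3.connect(":memory:")
init_db(conn)
print(get_product_page(conn, 1))
```

Parameterized queries (`?` placeholders) are used throughout, which is also the safe way to query a managed database from application code.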

Monitoring and Management Tools

Effective monitoring and management are crucial for optimizing cloud server performance, ensuring high availability, and minimizing costs. Major cloud providers offer comprehensive toolsets designed to provide granular insights into resource utilization, application performance, and overall system health. These tools empower administrators to proactively address potential issues, scale resources dynamically, and maintain optimal operational efficiency.

Cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) each offer a suite of integrated monitoring and management tools. While they share some common functionalities, such as resource monitoring and logging, they also possess unique features tailored to their respective ecosystems and services. A comparative analysis reveals both similarities and key differences in their capabilities and user experience.

Cloud Provider Monitoring and Management Tool Comparison

The following table summarizes the key monitoring and management tools offered by AWS, Azure, and GCP, highlighting their core features and functionalities. Note that the features listed represent a selection of the most commonly used tools and may not encompass the full breadth of offerings from each provider.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Resource Monitoring | Amazon CloudWatch | Azure Monitor | Google Cloud Monitoring |
| Logging and Auditing | AWS CloudTrail, Amazon CloudWatch Logs | Azure Activity Log, Azure Monitor Logs | Cloud Logging, Cloud Audit Logs |
| Performance Analysis | Amazon CloudWatch Application Insights, AWS X-Ray | Azure Application Insights (including Profiler) | Cloud Profiler, Cloud Trace |
| Alerting and Notifications | Amazon CloudWatch Alarms, Amazon SNS | Azure Monitor Alerts, Azure Logic Apps | Cloud Monitoring alerting, Pub/Sub |
| Management Consoles | AWS Management Console | Azure portal | Google Cloud console |
| Automation and Orchestration | AWS Systems Manager, AWS CloudFormation | Azure Automation, Azure Resource Manager | Deployment Manager, Cloud Functions |

Optimizing Cloud Server Performance with Monitoring Tools

Effective utilization of monitoring and management tools can significantly enhance cloud server performance. By leveraging these tools, administrators can proactively identify and resolve performance bottlenecks, optimize resource allocation, and ensure high availability.

For example, with Amazon CloudWatch an administrator can monitor the CPU utilization and network traffic of EC2 instances; memory usage is not reported by default and requires installing the CloudWatch agent. If CPU utilization stays above a predefined threshold, a CloudWatch alarm can notify the administrator (for instance, through Amazon SNS) of a potential performance issue. This allows timely intervention, such as moving to a larger instance size or optimizing application code, before performance degrades for users. Azure Monitor provides equivalent metrics and alerting across Azure services, and Google Cloud Monitoring offers comparable functionality for identifying and resolving performance bottlenecks in GCP environments.
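The alarm logic described above can be approximated in a few lines. CloudWatch alarms can require that a number of datapoints breach the threshold within a set of evaluation periods before entering the ALARM state; the sketch mimics that "M out of N" rule with hypothetical CPU readings and omits features such as the INSUFFICIENT_DATA state and missing-data handling.

```python
from collections import deque


class ThresholdAlarm:
    """Enter ALARM when at least M of the last N datapoints exceed a threshold."""

    def __init__(self, threshold, evaluation_periods=5, datapoints_to_alarm=3):
        if not 1 <= datapoints_to_alarm <= evaluation_periods:
            raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
        self.threshold = threshold
        self.datapoints_to_alarm = datapoints_to_alarm
        self.recent = deque(maxlen=evaluation_periods)  # keeps only the last N results
        self.state = "OK"

    def record(self, value):
        self.recent.append(value > self.threshold)
        breaching = sum(self.recent)
        self.state = "ALARM" if breaching >= self.datapoints_to_alarm else "OK"
        return self.state


# Hypothetical 5-minute average CPU readings (%) against an 80% threshold
alarm = ThresholdAlarm(threshold=80)
for cpu in [50, 85, 90, 70, 95]:
    print(cpu, alarm.record(cpu))
```

Requiring several breaching datapoints rather than one keeps a brief CPU spike from paging an administrator.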

Furthermore, tools like AWS X-Ray and Azure Application Insights provide application-level performance insights, allowing developers to pinpoint slow database queries or inefficient code segments. This granular level of visibility enables developers to optimize their applications for better performance and scalability. These insights are crucial for ensuring responsiveness and a positive user experience.

Disaster Recovery and Business Continuity

Cloud server providers offer robust solutions to ensure business continuity and facilitate swift recovery from unexpected events. Their services are designed to minimize downtime and data loss, safeguarding critical business operations. This is achieved through a combination of infrastructure redundancy, data replication, and advanced recovery strategies.

These services are typically built on highly available, geographically dispersed infrastructure, which allows workloads to fail over and recover quickly when an outage or disaster strikes. As a result, businesses can keep operating through unforeseen events such as natural disasters, cyberattacks, or hardware failures.

Disaster Recovery Strategies

Several disaster recovery strategies are available through cloud providers, each with its own strengths and weaknesses. The choice of strategy depends on factors such as the criticality of the applications, the recovery time objective (RTO), and the recovery point objective (RPO). A well-defined disaster recovery plan, incorporating a suitable strategy, is essential for minimizing business disruption.

Geographic Redundancy

Geographic redundancy, also known as geo-replication, involves replicating data and applications across multiple geographically separated data centers. If a disaster affects one location, the system automatically fails over to a backup location, ensuring minimal downtime. This strategy is particularly effective for organizations with stringent RTO and RPO requirements. For example, a financial institution might utilize geo-replication to ensure continuous access to critical financial systems, even in the event of a regional power outage or natural disaster affecting one of their data centers.
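Automatic failover of this kind is usually driven by health checks. The sketch below is a hypothetical router, similar in spirit to DNS failover routing: it sends traffic to the highest-priority region that has not failed a set number of consecutive health checks, so a single failed probe does not trigger failover. Region names and the threshold are assumptions, and the sketch ignores replication lag, which in practice determines how much recent data the backup region holds.

```python
class FailoverRouter:
    """Route to the highest-priority region that is still passing health checks."""

    def __init__(self, regions_by_priority, failure_threshold=3):
        self.regions = list(regions_by_priority)  # primary first, then backups
        self.failure_threshold = failure_threshold
        self.consecutive_failures = {region: 0 for region in self.regions}

    def report_health_check(self, region, healthy):
        # A passing check resets the count; a failing one increments it
        self.consecutive_failures[region] = 0 if healthy else self.consecutive_failures[region] + 1

    def active_region(self):
        for region in self.regions:
            if self.consecutive_failures[region] < self.failure_threshold:
                return region
        raise RuntimeError("no healthy region available")


router = FailoverRouter(["primary-east", "secondary-west"])  # hypothetical region names
for _ in range(3):
    router.report_health_check("primary-east", healthy=False)
print(router.active_region())
```

In this sketch traffic returns to the primary as soon as it passes a check again; many real deployments make failback a deliberate step so that data can be resynchronized first.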

Backup and Restore

Regular backups of data and applications are crucial for disaster recovery. Cloud providers offer various backup solutions, including automated backups, incremental backups, and offsite storage. These backups can be restored to a new server or virtual machine quickly in case of data loss or system failure. A retail company, for instance, might use automated daily backups of its sales data to ensure that it can recover lost transactions in case of a server crash or ransomware attack. The ability to restore data from a recent backup minimizes data loss and allows for rapid recovery.
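Incremental backups copy only what has changed since the previous run. The Python sketch below approximates this at the file level. It compares SHA-256 content hashes against a manifest from the last backup. The function names and manifest format are hypothetical. Managed backup services usually track changes at the block or snapshot level instead.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents, read in chunks to bound memory use."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def incremental_backup(source: Path, dest: Path, manifest: dict[str, str]) -> list[str]:
    """Copy only files whose contents changed since the last run.

    `manifest` maps relative paths to digests from the previous backup and
    is updated in place. Returns the relative paths that were copied.
    """
    copied = []
    for path in sorted(source.rglob("*")):
        if not path.is_file():
            continue
        rel = path.relative_to(source).as_posix()
        digest = file_digest(path)
        if manifest.get(rel) != digest:
            target = dest / rel
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(path, target)  # preserves timestamps and metadata
            manifest[rel] = digest
            copied.append(rel)
    return copied
```

This sketch does not remove backed-up files when they are deleted at the source, and it keeps the manifest in memory. A real tool would persist the manifest alongside the backup.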

High Availability

High availability solutions are designed to keep applications and services operational even when hardware or software fails. They typically rely on redundant components, load balancing, and automated failover mechanisms. A web hosting company, for example, might use a load balancer to distribute traffic across multiple web servers. If one server fails, the load balancer's health checks detect the failure and stop routing requests to it. Traffic is redirected to the remaining servers, so the website stays accessible to users.
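The load-balancing behavior described above can be sketched as a round-robin balancer that skips servers a health check has marked as down. This Python class is a hypothetical illustration and does not reflect any provider's load balancer API. The server names are placeholders.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Distribute requests across servers, skipping ones marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        # Called when a health check fails for this server.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Called when a previously failed server passes its health check again.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Make at most one full pass over the ring before giving up.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers")


lb = RoundRobinBalancer(["web1", "web2", "web3"])
lb.mark_down("web2")  # simulate a failed health check
print([lb.next_server() for _ in range(4)])
```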

Cloud-Based Disaster Recovery as a Service (DRaaS)

DRaaS solutions provide a comprehensive set of tools and services for disaster recovery, typically including backup and recovery, replication, failover, and failback. With DRaaS, a company can test its disaster recovery plan regularly to confirm that its systems can be recovered quickly and efficiently. Because the provider manages the underlying infrastructure, DRaaS can be a cost-effective alternative to building and maintaining dedicated on-site disaster recovery infrastructure, letting the business focus on its core competencies.
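A disaster recovery test comes down to running the recovery procedure and measuring it against the RTO. This Python sketch times a recovery callable. The `restore_from_replica` function is a hypothetical placeholder for whatever failover or restore steps a DRaaS runbook would trigger.

```python
import time
from datetime import timedelta
from typing import Callable


def run_dr_drill(recover: Callable[[], bool], rto: timedelta) -> dict:
    """Run a recovery procedure, time it, and compare the result to the RTO."""
    start = time.monotonic()
    succeeded = recover()
    elapsed = timedelta(seconds=time.monotonic() - start)
    return {
        "succeeded": succeeded,
        "elapsed": elapsed,
        "met_rto": succeeded and elapsed <= rto,
    }


def restore_from_replica() -> bool:
    # Hypothetical stand-in for real restore steps (boot replica VMs,
    # repoint DNS, run smoke tests); here it only simulates some work.
    time.sleep(0.1)
    return True


report = run_dr_drill(restore_from_replica, rto=timedelta(minutes=15))
print(report)
```

Recording each drill's `elapsed` value over time also shows whether recovery is drifting toward the RTO as systems grow.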

Open Source vs. Proprietary Cloud Solutions

The choice between open-source and proprietary cloud server solutions significantly impacts cost, control, customization, and security. Understanding the strengths and weaknesses of each approach is crucial for businesses seeking to optimize their cloud infrastructure. This section will compare and contrast these two major categories, highlighting key considerations for informed decision-making.

Advantages and Disadvantages of Open-Source Cloud Solutions

Open-source cloud solutions offer several advantages, including greater flexibility and customization, cost savings through reduced licensing fees, and access to a large community for support and development. However, they may require more technical expertise for implementation and maintenance, and security can be a concern if not properly managed.

- Advantages: Increased flexibility and customization; lower costs due to the absence of licensing fees; strong community support and active development; greater transparency and control over the source code.

- Disadvantages: Requires higher technical expertise for setup and maintenance; potential security vulnerabilities if not properly configured and maintained; potentially slower updates compared to proprietary solutions; commercial vendor support may be limited, leaving teams to rely on community help.

Advantages and Disadvantages of Proprietary Cloud Solutions

Proprietary cloud solutions typically provide robust vendor support, readily available updates, and often better integration with other services within the vendor’s ecosystem. They often offer a more streamlined user experience. However, they typically come with higher costs due to licensing fees and can offer less flexibility and customization compared to open-source alternatives.

- Advantages: Comprehensive vendor support and readily available updates; typically user-friendly interfaces and streamlined management; often offer seamless integration with other vendor services; robust security features implemented by the vendor.

- Disadvantages: Higher costs due to licensing fees and potential vendor lock-in; limited flexibility and customization options; less transparency and control over the underlying infrastructure; reliance on the vendor for updates and support.

Key Considerations When Choosing Between Open-Source and Proprietary Options

Several factors should be considered when selecting between open-source and proprietary cloud solutions. These include budget constraints, the level of in-house technical expertise, the required level of customization, security requirements, and the importance of vendor support.

- Budget: Open-source solutions generally offer lower upfront costs, while proprietary solutions often involve ongoing licensing fees.

- Technical Expertise: Open-source solutions demand greater technical expertise for deployment and maintenance, while proprietary solutions are usually more user-friendly.

- Customization Needs: Open-source solutions offer greater flexibility for customization, whereas proprietary solutions may have limitations.

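- Security Requirements: Both options can offer robust security, but responsibility for it is divided differently. With open-source solutions, the organization must configure and maintain security itself. With proprietary services, the vendor secures the underlying platform, but customers remain responsible for securing their own configurations, data, and access controls.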

- Vendor Support: Proprietary solutions typically offer extensive vendor support, whereas open-source relies on community support, which may vary in quality and responsiveness.
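One common way to weigh these considerations is a weighted scoring matrix. In the Python sketch below, the weights and the 1-5 ratings are hypothetical placeholders that an organization would replace with its own assessment. They are not measurements of any real product.

```python
def weighted_score(weights: dict[str, float], ratings: dict[str, float]) -> float:
    """Combine per-criterion ratings into one score using normalized weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Hypothetical weights reflecting one organization's priorities.
weights = {"budget": 3, "expertise": 2, "customization": 1, "security": 3, "support": 2}

# Hypothetical 1-5 ratings; each team should supply its own assessment.
options = {
    "open-source": {"budget": 5, "expertise": 2, "customization": 5, "security": 3, "support": 2},
    "proprietary": {"budget": 2, "expertise": 4, "customization": 3, "security": 4, "support": 5},
}

ranked = sorted(options, key=lambda o: weighted_score(weights, options[o]), reverse=True)
for option in ranked:
    print(f"{option}: {weighted_score(weights, options[option]):.2f}")
```

Changing a single weight can reverse the ranking. This is why the weights should be agreed on before any options are rated.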

Examples of Popular Open-Source and Proprietary Cloud Server Solutions

Numerous open-source and proprietary cloud solutions cater to diverse needs. OpenStack is a widely adopted open-source cloud computing platform, providing Infrastructure-as-a-Service (IaaS) capabilities. On the proprietary side, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are leading providers offering a broad range of IaaS, Platform-as-a-Service (PaaS), and Software-as-a-Service (SaaS) solutions. These solutions differ significantly in their features, pricing models, and support levels.

Future Trends in Cloud Server Technology

The cloud server market is in constant evolution, driven by increasing demand for scalability, efficiency, and cost-effectiveness. Several emerging trends are reshaping the landscape, promising significant advances in computing power and resource management. These trends affect not only how businesses use cloud services but also the architecture and design of cloud server infrastructure itself.

The convergence of several technological advancements is leading to a paradigm shift in how we approach cloud computing. This shift is characterized by a move away from traditional virtual machine-based deployments towards more efficient and specialized models, focusing on optimizing resource utilization and reducing operational overhead. Two prominent examples of this shift are serverless computing and edge computing.

Serverless Computing

Serverless computing represents a significant departure from traditional cloud server models. Instead of managing servers directly, developers deploy code as functions that run only when triggered by an event, such as an HTTP request or a new file arriving in storage. The provider handles server provisioning, scaling, and maintenance. Billing is typically based on invocations and execution time, which can lower costs for intermittent or bursty workloads and improve scalability. AWS Lambda, Google Cloud Functions, and Azure Functions are prominent examples of serverless platforms. Their impact on the cloud server market is substantial because the focus shifts from managing infrastructure to managing code, so developers can concentrate on application logic rather than server administration. For businesses of all sizes, this can lead to faster development cycles and lower operational costs.
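To illustrate the function-as-a-unit-of-deployment model, here is a minimal Python handler in the `(event, context)` form used by AWS Lambda. It assumes an API Gateway proxy-style event carrying an optional `queryStringParameters` dictionary. Google Cloud Functions and Azure Functions use different signatures.

```python
import json


def lambda_handler(event, context):
    """Entry point the platform invokes once per triggering event.

    Assumes an API Gateway proxy-style event; `context` is unused here.
    """
    # queryStringParameters may be missing or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is an ordinary function, it can be exercised locally before deployment, for example with `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`.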

Edge Computing

Edge computing addresses the limitations of centralized cloud computing by bringing computation and data storage closer to the source of data generation. This approach is particularly beneficial for applications requiring low latency, such as real-time video processing, autonomous vehicles, and IoT devices. By processing data at the edge, edge computing reduces the reliance on bandwidth-intensive data transfers to central cloud servers, resulting in faster response times and improved overall performance. Examples of edge computing implementations include deploying small-scale servers in retail stores to process point-of-sale transactions or using edge devices in industrial settings for real-time sensor data analysis. The impact on the cloud server market involves the creation of a distributed cloud infrastructure, requiring specialized hardware and software solutions designed for deployment in diverse environments. This leads to new opportunities for providers offering edge computing services and specialized hardware.
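The bandwidth saving comes from processing data where it is produced and sending only the results upstream. This Python sketch summarizes a window of sensor readings on an edge device and flags values above a threshold. The function name, threshold, and readings are hypothetical.

```python
from statistics import mean


def summarize_at_edge(readings, threshold):
    """Reduce a window of raw sensor readings to a compact summary.

    Only this summary, including any readings above `threshold`, would be
    sent to the central cloud instead of every raw sample.
    """
    if not readings:
        return {"count": 0, "mean": None, "max": None, "anomalies": []}
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "anomalies": [r for r in readings if r > threshold],
    }


# Hypothetical temperature readings from one sampling window.
readings = [20.5, 21.0, 20.8, 87.2, 21.1]
summary = summarize_at_edge(readings, threshold=80.0)
print(summary)
```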

Challenges and Opportunities in Emerging Trends

The adoption of serverless and edge computing presents both challenges and opportunities. Understanding these aspects is crucial for navigating the evolving cloud server market.

Implementing serverless and edge computing architectures requires specialized skills, which creates demand for professionals who can design, deploy, and manage these systems. This is a significant opportunity for training and education providers focused on cloud technologies. However, a shortage of skilled personnel can also slow adoption.

Security is a second challenge. Protecting data in decentralized edge environments is considerably harder than in a centralized data center, so robust security measures are essential to mitigate the risks of distributed data processing and storage. Standardized security protocols and frameworks will be crucial for wider adoption.

Finally, effective monitoring and management of serverless and edge deployments require advanced tools and techniques. As these systems grow more complex, building tools that provide comprehensive visibility and control across distributed systems is a key opportunity for technology vendors.

User Queries

What is the difference between IaaS, PaaS, and SaaS?

IaaS (Infrastructure as a Service) provides virtualized computing resources like servers, storage, and networking. PaaS (Platform as a Service) offers a platform for developing and deploying applications, including tools and services. SaaS (Software as a Service) delivers software applications over the internet, eliminating the need for local installation.

How do I choose the right cloud server provider for my business?

Consider your budget, required resources (compute, storage, bandwidth), security needs, geographic location, and the provider’s reputation and support. Evaluate SLAs and pricing models carefully.

What are the security risks associated with using cloud servers?

Security risks include data breaches, unauthorized access, and denial-of-service attacks. Mitigate these risks by choosing a reputable provider with strong security features, implementing robust security practices, and regularly monitoring your systems.

What is serverless computing?

Serverless computing is a cloud execution model where the cloud provider dynamically manages the allocation of computing resources. Developers write and deploy code without managing servers.