Defining Cloud-Based Servers

Cloud-based servers represent a paradigm shift in computing infrastructure, offering businesses and individuals scalable, on-demand access to computing resources over the internet. Unlike traditional on-premise servers, cloud servers are managed and maintained by a third-party provider, eliminating the need for significant upfront investment in hardware and IT expertise. This allows for greater flexibility and efficiency in managing IT resources.

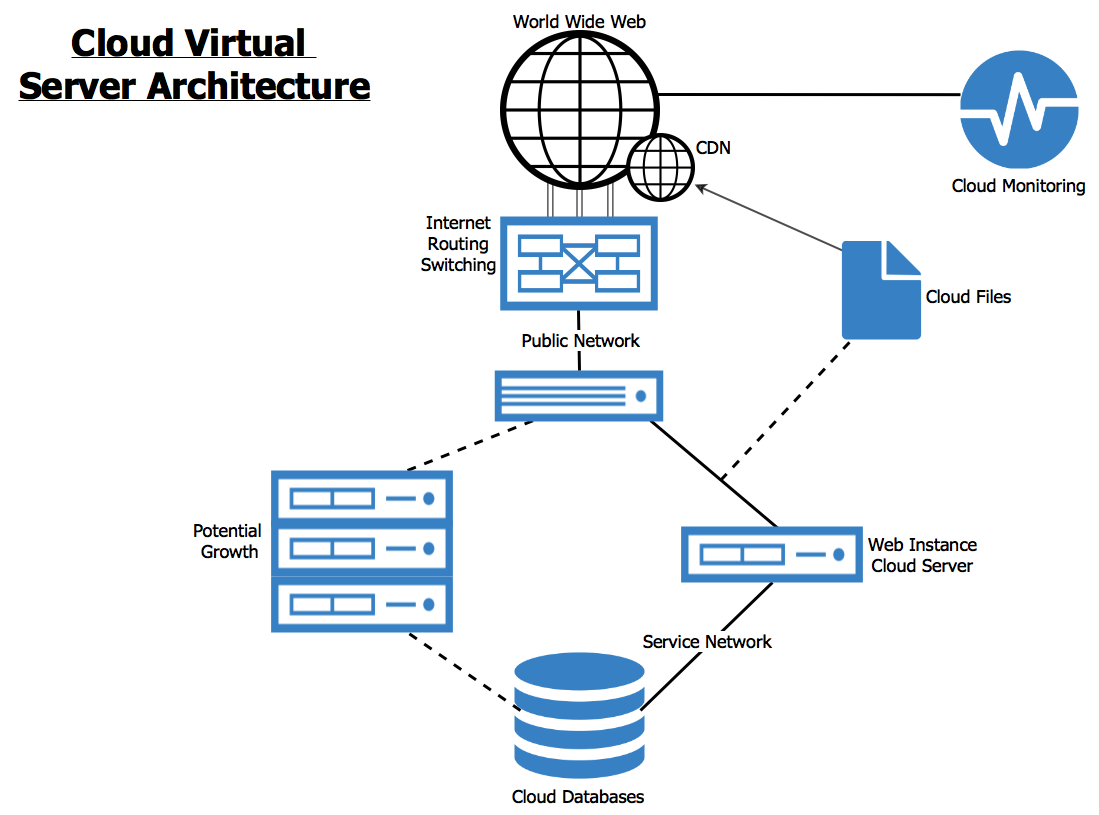

Cloud-based server architecture comprises several core components working in concert. These include virtual machines (VMs), which are software-based emulations of physical servers, providing isolated environments for applications and data. A robust network infrastructure, encompassing high-speed connections and data centers distributed geographically, ensures accessibility and redundancy. Storage solutions, ranging from object storage to block storage, provide scalable and reliable data management. Finally, a comprehensive management console allows users to monitor, configure, and control their server resources. The interplay of these components ensures the seamless delivery of computing power and data storage.

Cloud Server Models: Public, Private, and Hybrid

The cloud computing landscape offers several deployment models, each tailored to specific needs and security requirements. Public cloud servers, offered by providers like AWS, Azure, and Google Cloud, are shared resources accessible over the internet. This model offers cost-effectiveness and scalability but may raise concerns regarding data security and control. Private cloud servers, on the other hand, are dedicated resources hosted either on-premise or in a provider’s data center, offering enhanced security and control but at a higher cost. Hybrid cloud servers combine elements of both public and private clouds, leveraging the benefits of each model. For example, a company might use a private cloud for sensitive data and a public cloud for less critical applications, optimizing cost and security.

Comparison of Cloud Service Providers

Several major players dominate the cloud server market, each offering a unique set of features and services. Amazon Web Services (AWS) is a market leader, providing a comprehensive suite of services, including Elastic Compute Cloud (EC2) for virtual servers, Simple Storage Service (S3) for object storage, and Relational Database Service (RDS) for database management. Microsoft Azure offers comparable services, emphasizing integration with Microsoft products and technologies. Google Cloud Platform (GCP) focuses on data analytics and machine learning, providing powerful compute engines and robust data storage solutions. These providers, along with others like Alibaba Cloud and Oracle Cloud, offer a wide range of server options, from basic virtual machines to specialized high-performance computing instances, catering to diverse needs and budgets. The choice of provider often depends on factors such as existing infrastructure, specific application requirements, and budgetary considerations. Pricing models also differ in their details, which affects overall cost. All three major providers bill on demand (pay-as-you-go) by default and discount committed usage: reserved instances on AWS and Azure, committed use discounts on Google Cloud.

Security Considerations for Cloud Servers

The migration of data and applications to cloud-based servers offers numerous benefits, but it also introduces new security challenges. Understanding and mitigating these risks is crucial for maintaining data integrity, ensuring business continuity, and protecting sensitive information. A robust security strategy is not merely an add-on but an integral part of a successful cloud deployment.

Common Security Threats Associated with Cloud Servers

Cloud environments, while offering scalability and flexibility, are susceptible to a range of security threats. These threats can originate from both internal and external sources, targeting various aspects of the infrastructure and applications. Effective security measures must address these vulnerabilities proactively.

- Data breaches: Unauthorized access to sensitive data stored on cloud servers remains a significant concern. This can result from vulnerabilities in the server’s operating system, application flaws, or compromised user credentials.

- Denial-of-service (DoS) attacks: These attacks overwhelm cloud servers with traffic, rendering them inaccessible to legitimate users. Distributed denial-of-service (DDoS) attacks, originating from multiple sources, are particularly difficult to mitigate.

- Malware infections: Malicious software can infect cloud servers, potentially compromising data, stealing credentials, or disrupting operations. This can occur through vulnerabilities in applications or operating systems, or through phishing attacks targeting users.

- Insider threats: Employees with privileged access to cloud servers can pose a significant risk. Malicious or negligent actions by insiders can lead to data breaches or service disruptions.

- Misconfigurations: Incorrectly configured cloud servers can expose vulnerabilities. For example, improperly configured firewalls or access controls can allow unauthorized access.
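Misconfigurations like the firewall example above can often be caught by a simple automated check. The following is a minimal sketch rather than any provider's API: the `IngressRule` type, the `SENSITIVE_PORTS` list, and the sample rules are assumptions made for illustration. It flags rules that expose an administrative or database port to every address.

```python
from dataclasses import dataclass
from ipaddress import ip_network


@dataclass(frozen=True)
class IngressRule:
    port: int
    cidr: str  # source address range allowed to connect


# Assumed list of ports that should rarely be reachable from anywhere.
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def find_open_admin_ports(rules):
    """Return the rules that expose a sensitive port to all addresses."""
    findings = []
    for rule in rules:
        network = ip_network(rule.cidr, strict=False)
        # A prefix length of 0 (0.0.0.0/0 or ::/0) matches every address.
        if network.prefixlen == 0 and rule.port in SENSITIVE_PORTS:
            findings.append(rule)
    return findings


# Hypothetical rule set for one server.
rules = [
    IngressRule(443, "0.0.0.0/0"),    # public HTTPS: expected
    IngressRule(22, "0.0.0.0/0"),     # SSH open to the world: flagged
    IngressRule(5432, "10.0.0.0/16"),  # database reachable only internally
]

for rule in find_open_admin_ports(rules):
    print(f"{SENSITIVE_PORTS[rule.port]} (port {rule.port}) is open to {rule.cidr}")
```

In practice the rule list would come from an export of the provider's firewall or security group configuration rather than being written by hand.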

Best Practices for Securing Cloud Server Environments

Implementing a multi-layered security approach is essential for protecting cloud servers. This involves a combination of technical controls, administrative procedures, and employee training.

- Strong passwords and multi-factor authentication (MFA): Implementing strong password policies and requiring MFA for all users significantly reduces the risk of unauthorized access.

- Regular security audits and penetration testing: Regular assessments identify vulnerabilities and weaknesses in the cloud infrastructure and applications. Penetration testing simulates real-world attacks to evaluate the effectiveness of security controls.

- Network segmentation and firewalls: Dividing the cloud network into smaller, isolated segments limits the impact of a security breach. Firewalls control network traffic, blocking unauthorized access.

- Data encryption: Encrypting data both in transit and at rest protects sensitive information from unauthorized access, even if a breach occurs.

- Regular software updates and patching: Keeping operating systems, applications, and other software components up-to-date patches vulnerabilities that attackers could exploit.

- Intrusion detection and prevention systems (IDPS): These systems monitor network traffic and system activity for malicious behavior, alerting administrators to potential threats and automatically blocking attacks.

- Access control lists (ACLs): ACLs define which users or groups have access to specific resources, ensuring that only authorized personnel can access sensitive data or applications.

- Vulnerability scanning: Regularly scanning for vulnerabilities helps identify and address security weaknesses before they can be exploited by attackers.
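The access control idea in the list above can be sketched in a few lines. This is an illustrative, deny-by-default check, not a real provider's ACL format: the resource names, group names, and the `is_allowed` helper are all hypothetical.

```python
# Hypothetical ACL: resource -> action -> groups allowed to perform it.
ACL = {
    "orders-db": {"read": {"analysts", "backend"}, "write": {"backend"}},
    "billing-api": {"read": {"finance"}, "write": {"finance"}},
}


def is_allowed(user_groups, resource, action):
    """Deny by default; allow only when one of the user's groups is listed."""
    allowed_groups = ACL.get(resource, {}).get(action, set())
    return not allowed_groups.isdisjoint(user_groups)


print(is_allowed({"analysts"}, "orders-db", "read"))        # True
print(is_allowed({"analysts"}, "orders-db", "write"))       # False
print(is_allowed({"backend"}, "unknown-resource", "read"))  # False
```

Unknown resources and unknown actions fall through to an empty set, so anything not explicitly granted is refused.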

Comprehensive Security Plan for a Hypothetical Cloud-Based Server Deployment

A hypothetical deployment of a cloud-based e-commerce platform requires a robust security plan encompassing all aspects of the system. This plan should address data security, network security, application security, and user security.

This plan would incorporate the best practices outlined above, including implementing strong authentication, regular security audits, data encryption, network segmentation, and intrusion detection. Specific considerations would include secure coding practices for the e-commerce application, PCI DSS compliance for handling credit card information, and regular backups to ensure business continuity in the event of a disaster. Furthermore, a detailed incident response plan would outline procedures to be followed in case of a security breach, minimizing the impact and ensuring a swift recovery.

Cost Optimization of Cloud Servers

Managing cloud server costs effectively is crucial for maintaining a healthy budget and maximizing return on investment. Uncontrolled spending on cloud resources can quickly escalate, impacting profitability. This section explores various strategies for optimizing your cloud spending and ensuring you only pay for what you need.

Cost optimization involves a multi-faceted approach, encompassing careful planning, efficient resource utilization, and a deep understanding of the various pricing models offered by cloud providers. By implementing the strategies outlined below, businesses can significantly reduce their cloud infrastructure expenses without compromising performance or reliability.

Methods for Optimizing Cloud Server Costs

Effective cost optimization requires a proactive and strategic approach. This involves leveraging various tools and techniques provided by cloud providers, along with careful planning and monitoring of resource usage. Key methods include:

- Choosing the right cloud provider: Each provider (AWS, Azure, GCP) offers different pricing structures and services. A thorough comparison, considering your specific needs and usage patterns, is essential for identifying the most cost-effective option.

- Committing to predictable workloads: Reserved instances or committed use discounts can significantly reduce costs for predictable workloads.

- Adjusting resource allocation: Regularly reviewing and adjusting your resource allocation, such as scaling down instances during periods of low demand, is crucial for optimizing spending.

- Using free tiers: Free tiers and free tools offered by cloud providers can contribute to substantial savings.

Strategies for Right-Sizing Cloud Server Instances

Right-sizing involves selecting the appropriate server instance type that meets your application’s requirements without overspending on unnecessary resources. Over-provisioning leads to wasted resources and higher costs. Under-provisioning, on the other hand, can result in performance bottlenecks and application instability.

A thorough analysis of your application’s resource needs (CPU, memory, storage, and network bandwidth) is the foundation of right-sizing. Use cloud provider monitoring tools to identify instances whose resources are consistently underutilized. Then downsize to smaller instances, or consolidate multiple smaller instances into fewer, larger ones, depending on the workload characteristics. Regularly reviewing and adjusting instance sizes based on observed usage patterns is key to maintaining optimal performance and cost efficiency. Automated scaling complements right-sizing by adjusting the number of running instances to real-time demand, preventing over-provisioning during periods of low activity.
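As a rough illustration of the analysis described above, the sketch below classifies an instance from its CPU utilization samples. The 20% and 80% thresholds, the use of the 95th percentile, and the sample data are assumptions chosen for the example; real right-sizing would also consider memory, storage, and network metrics.

```python
from statistics import quantiles


def recommend_action(cpu_samples, low=20.0, high=80.0):
    """Classify an instance from CPU utilization samples (in percent).

    Uses the 95th percentile so that a single short spike does not
    dominate the decision. Thresholds are illustrative defaults.
    """
    # With n=20 the last cut point is the 95th percentile.
    p95 = quantiles(cpu_samples, n=20, method="inclusive")[-1]
    if p95 < low:
        return "downsize"
    if p95 > high:
        return "upsize"
    return "keep"


# Hypothetical utilization samples for three instances.
fleet = {
    "batch-worker": [5, 8, 12, 9, 7, 10, 6, 11],
    "api-server": [85, 90, 88, 92, 95, 87],
    "web-frontend": [30, 45, 50, 60, 40, 55],
}

for name, samples in fleet.items():
    print(name, "->", recommend_action(samples))
```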

Cost Analysis of Different Cloud Server Pricing Models

Cloud providers typically offer various pricing models, including pay-as-you-go, reserved instances, and spot instances. Each model has its own cost implications and is suitable for different usage patterns. Understanding these models is critical for making informed decisions and optimizing costs.

Pay-as-you-go is the most flexible model, charging only for the resources consumed. Reserved instances offer significant discounts for committing to a specific instance type and duration. Spot instances provide the lowest prices but come with the risk of interruption. The optimal choice depends on the predictability of your workload and your tolerance for risk.

| Feature | AWS (Example Pricing) | Azure (Example Pricing) | GCP (Example Pricing) |
|---|---|---|---|
| Standard Virtual Machine (e.g., 2 vCPU, 4GB RAM, 50GB Storage) – Hourly Rate | $0.08 | $0.07 | $0.06 |
| Reserved Instance Discount (1-year term) | Up to 75% | Up to 72% | Up to 60% |
| Spot Instance Discount | Up to 90% | Up to 90% | Up to 70% |
| Data Transfer Costs | Varies by region and data volume | Varies by region and data volume | Varies by region and data volume |
| Storage Costs | Varies by storage type and region | Varies by storage type and region | Varies by storage type and region |

Note: The pricing shown in the table is for illustrative purposes only and is subject to change. Actual pricing will vary depending on the specific instance type, region, and other factors. Consult the respective cloud provider’s pricing calculators for the most up-to-date and accurate pricing information.
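The trade-off between pay-as-you-go and reserved pricing comes down to simple arithmetic: a reservation is billed for every hour whether or not the server runs, so it only pays off when utilization is high enough. The sketch below works this through with the illustrative $0.08 hourly rate and an assumed 40% reservation discount; both figures are examples, not quotes from any provider.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


def monthly_cost(hourly_rate, hours_billed, discount=0.0):
    """Cost of `hours_billed` hours at `hourly_rate`, less a fractional discount."""
    return hourly_rate * hours_billed * (1 - discount)


def cheaper_model(hourly_rate, utilization, reserved_discount):
    """Compare on-demand (billed for hours used) with a reservation
    (billed for all hours at a discount). Returns (model, monthly cost)."""
    on_demand = monthly_cost(hourly_rate, HOURS_PER_MONTH * utilization)
    reserved = monthly_cost(hourly_rate, HOURS_PER_MONTH, reserved_discount)
    if reserved < on_demand:
        return "reserved", reserved
    return "on-demand", on_demand


# Illustrative figures only: $0.08/hour and a 40% reservation discount.
for utilization in (0.5, 0.9):
    model, cost = cheaper_model(0.08, utilization, 0.40)
    print(f"{utilization:.0%} utilization: {model} at ${cost:.2f}/month")
```

Under these assumptions the break-even point is a utilization of one minus the discount, here 60%: below it, paying on demand is cheaper; above it, the reservation wins.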

Scalability and Performance of Cloud Servers

Cloud servers offer unparalleled scalability and performance advantages compared to traditional on-premise solutions. Their ability to rapidly adjust resources based on demand makes them ideal for businesses of all sizes, from startups experiencing rapid growth to established enterprises managing fluctuating workloads. This adaptability translates to cost-effectiveness and improved application responsiveness, ensuring a positive user experience.

Cloud servers handle scaling demands through various mechanisms. The most fundamental is the ability to easily add or remove computing resources, such as virtual machines (VMs), storage capacity, and network bandwidth, on demand. This elasticity allows businesses to scale their infrastructure up during peak usage periods and down during lulls, optimizing resource utilization and minimizing costs. This contrasts sharply with traditional servers, where scaling often requires significant upfront investment and lead times. Automated scaling features, often integrated into cloud platforms, further enhance this capability by automatically adjusting resources based on predefined metrics, such as CPU utilization or network traffic.
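To make the idea of metric-driven scaling concrete, here is a minimal sketch of a proportional scaling rule: the fleet is resized so that the average per-instance metric moves toward a target value. The function name, the bounds, and the example numbers are assumptions for illustration; each cloud platform implements its own policies with additional safeguards such as cooldown periods.

```python
import math


def desired_instances(current, metric, target, min_size=1, max_size=10):
    """Return the instance count that would bring the average metric
    (for example CPU percent) back to `target`, clamped to the bounds."""
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    wanted = math.ceil(current * metric / target)
    return max(min_size, min(max_size, wanted))


print(desired_instances(4, metric=90, target=60))  # 6: scale out under load
print(desired_instances(4, metric=15, target=60))  # 1: scale in when idle
print(desired_instances(8, metric=95, target=50))  # 10: capped at max_size
```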

Techniques for Improving Cloud Application Performance

Optimizing the performance of cloud-based applications requires a multi-faceted approach. Careful consideration of several key areas is crucial for achieving optimal speed and responsiveness. Database optimization, for example, involves techniques like query optimization, caching, and database sharding to improve data retrieval times. Efficient code practices, such as minimizing latency-inducing operations and utilizing appropriate data structures, are also vital. Content Delivery Networks (CDNs) can significantly reduce latency by caching static content closer to users geographically, resulting in faster load times. Load balancing distributes traffic across multiple servers, preventing any single server from becoming overloaded and ensuring consistent application responsiveness. Finally, selecting the appropriate instance types for your application’s workload, considering factors such as CPU, memory, and storage needs, is paramount for optimal performance.
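Load balancing is the easiest of these techniques to show in code. The sketch below implements plain round-robin selection with a simple health flag; the `RoundRobinBalancer` class and the backend names are hypothetical, and managed load balancers add health probes, weighting, and connection draining on top of this basic idea.

```python
class RoundRobinBalancer:
    """Hand out backends in rotation, skipping any marked as down."""

    def __init__(self, backends):
        self._backends = list(backends)
        if not self._backends:
            raise ValueError("at least one backend is required")
        self._healthy = set(self._backends)
        self._index = 0

    def mark_down(self, backend):
        self._healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self):
        # Try each backend at most once per call.
        for _ in range(len(self._backends)):
            backend = self._backends[self._index]
            self._index = (self._index + 1) % len(self._backends)
            if backend in self._healthy:
                return backend
        raise RuntimeError("no healthy backends available")


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([balancer.next_backend() for _ in range(4)])
balancer.mark_down("app-2")
print([balancer.next_backend() for _ in range(3)])
```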

Designing a Scalable Architecture for a High-Traffic Web Application

Designing a scalable architecture for a high-traffic web application using cloud servers involves a strategic approach. A common pattern is to employ a microservices architecture, breaking down the application into smaller, independent services. This modularity allows for independent scaling of individual services based on their specific needs, maximizing efficiency and minimizing resource waste. For example, a service handling user authentication might require fewer resources than a service processing image uploads. Load balancing is essential, distributing incoming traffic across multiple instances of each microservice. A robust caching strategy, leveraging both server-side caching (e.g., Redis) and CDN caching, minimizes database load and improves response times. Database scaling can be achieved through techniques like read replicas to handle read-heavy workloads and sharding to distribute data across multiple databases. Monitoring and logging are critical components, providing insights into application performance and enabling proactive identification and resolution of potential bottlenecks. A well-designed architecture also incorporates autoscaling features to automatically adjust resources based on real-time demand, ensuring optimal performance and resource utilization. For instance, a surge in traffic during a promotional campaign could trigger automatic scaling to accommodate the increased load without impacting user experience.
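Sharding, mentioned above as a database scaling technique, needs a rule for deciding which database holds a given record. One common approach is to hash a stable key. The sketch below is a minimal example under assumed names (`shard_for`, the `user-N` keys, four shards); it is not tied to any particular database product.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index in the range [0, shard_count)."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    # A cryptographic digest gives the same result in every process,
    # unlike Python's built-in hash(), which is randomized for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Distribute 1,000 hypothetical user IDs across 4 shards.
counts = [0] * 4
for user_id in range(1000):
    counts[shard_for(f"user-{user_id}", 4)] += 1
print(counts)
```

A plain modulo scheme like this moves most keys when the shard count changes, which is why systems that expect to add shards often use consistent hashing or a lookup table instead.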

Deployment and Management of Cloud Servers

Deploying and managing cloud servers effectively is crucial for ensuring the smooth operation and scalability of your applications. This involves a careful consideration of various factors, from initial setup and application deployment to ongoing monitoring and resource optimization. Efficient management translates directly to cost savings and improved application performance.

Deploying a Web Application to a Cloud Server

Deploying a web application involves several steps, starting with preparing your application code and configuring the server environment. The specific steps may vary depending on the chosen cloud provider (AWS, Azure, Google Cloud, etc.) and the type of application. However, common steps include code packaging, deployment via tools like Git or FTP, database configuration, and security hardening. Consider using automated deployment tools for efficiency and reduced error rates.

Methods for Managing and Monitoring Cloud Server Resources

Cloud providers offer a range of tools for managing and monitoring server resources. These tools typically provide real-time insights into CPU usage, memory consumption, network traffic, and disk I/O. They also offer features for automated scaling, allowing resources to be dynamically adjusted based on demand. Popular methods include using the cloud provider’s console, command-line interfaces (CLIs), and third-party monitoring tools that integrate with the cloud platform. Setting up alerts for critical metrics helps proactively address potential issues. For example, AWS offers CloudWatch, Azure offers Azure Monitor, and Google Cloud offers Cloud Monitoring. These tools provide dashboards, visualizations, and automated alerts.

Setting Up a Basic Cloud Server Instance

Setting up a basic cloud server instance is a straightforward process, generally involving these steps:

- Choose a Cloud Provider: Select a cloud provider based on your needs (AWS, Azure, Google Cloud, etc.). Each provider offers different pricing models and features.

- Select an Instance Type: Choose a virtual machine (VM) instance type based on your application’s resource requirements (CPU, memory, storage). Consider factors like cost and performance.

- Choose an Operating System: Select the operating system (OS) that your application requires (e.g., Linux, Windows). This will determine the environment your application runs in.

- Configure Storage: Choose the appropriate storage type (e.g., SSD, HDD) and size based on your application’s data storage needs. Consider using managed databases for enhanced reliability.

- Configure Networking: Set up networking parameters, including selecting a virtual private cloud (VPC) and configuring security groups to control network access to your instance. This ensures only authorized traffic can access your server.

- Connect to the Instance: Connect to your newly created instance using SSH (for Linux) or RDP (for Windows) to begin configuration and application deployment. This allows you to remotely manage your server.

- Install Necessary Software: Install any required software, such as a web server (Apache, Nginx), database server (MySQL, PostgreSQL), and programming language runtimes. This sets up the environment for your application.

- Deploy Your Application: Deploy your web application to the server using appropriate methods (e.g., Git, FTP, deployment tools). This makes your application accessible.

Data Backup and Recovery in Cloud Environments

Data backup and recovery are critical aspects of maintaining business continuity and ensuring data integrity when using cloud-based servers. A robust strategy safeguards against data loss from various sources, including hardware failures, software glitches, cyberattacks, and human error. This section explores different backup strategies, disaster recovery planning, and the creation of a comprehensive data backup and recovery plan for a critical business application.

Different Backup Strategies for Cloud Servers

Several strategies exist for backing up data on cloud servers, each offering varying levels of protection and recovery time objectives (RTOs) and recovery point objectives (RPOs). The choice depends on factors such as the criticality of the data, budget, and recovery requirements. These strategies often leverage the cloud provider’s built-in services or third-party backup solutions.

Disaster Recovery Planning for Cloud-Based Systems

Disaster recovery planning for cloud-based systems involves outlining procedures to ensure business continuity in the event of a major disruption. This plan should detail the steps to recover critical applications and data, including failover mechanisms to redundant systems or geographically dispersed data centers. The plan must consider various disaster scenarios, such as natural disasters, cyberattacks, and infrastructure failures. Regular testing of the disaster recovery plan is crucial to validate its effectiveness and identify areas for improvement. This testing should simulate real-world scenarios to ensure the plan’s practicality and efficiency.

Data Backup and Recovery Plan for a Critical Business Application

Consider a critical business application, such as an e-commerce platform, hosted on a cloud server using Amazon Web Services (AWS). A comprehensive data backup and recovery plan would incorporate the following elements:

| Backup Type | Frequency | Storage Location | Retention Policy | Recovery Process |
|---|---|---|---|---|
| Full Backup | Weekly | Amazon S3 Glacier Deep Archive (for long-term retention) and Amazon S3 Standard (for quicker access) | Retain for 3 years | Restore from S3 Standard for rapid recovery; restore from Glacier for longer-term recovery needs. |
| Incremental Backups | Daily | Amazon S3 Standard | Retain for 30 days | Restore from incremental backups in conjunction with the latest full backup. |
| Transaction Logs | Continuously | Amazon S3 Standard | Retain for 7 days | Point-in-time recovery using transaction logs. |

The plan also includes:

- Regular testing of the backup and recovery process to ensure its effectiveness.

- Offsite storage of backups in a geographically separate region for disaster recovery.

- A documented procedure for notifying relevant personnel in case of a data loss event.

- A clearly defined escalation path for handling critical issues.

- Regular review and updates to the plan to account for changes in the application or infrastructure.

This plan prioritizes both short-term and long-term data recovery, leveraging different storage tiers within AWS for optimal cost and recovery time. The inclusion of transaction logs allows point-in-time recovery, so that only the most recent, not yet archived transactions are at risk in an incident. Regular testing ensures that the plan remains effective and adaptable to evolving needs.
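Restoring from a mix of full and incremental backups means finding the latest full backup before the desired point in time and then applying every later incremental in order. The sketch below shows that selection logic. The `Backup` type, the `restore_chain` function, and the dates are hypothetical, and the code only picks which backups to apply; it does not perform a restore.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Backup:
    kind: str           # "full" or "incremental"
    taken_at: datetime


def restore_chain(backups, target):
    """Return the backups to apply, in order, to restore state as of `target`."""
    candidates = sorted(
        (b for b in backups if b.taken_at <= target),
        key=lambda b: b.taken_at,
    )
    fulls = [b for b in candidates if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup exists at or before the target time")
    base = fulls[-1]  # most recent full backup before the target
    increments = [
        b for b in candidates
        if b.kind == "incremental" and b.taken_at > base.taken_at
    ]
    return [base, *increments]


# Hypothetical schedule: weekly full backups, daily incrementals.
backups = [
    Backup("full", datetime(2024, 1, 7)),
    Backup("incremental", datetime(2024, 1, 8)),
    Backup("incremental", datetime(2024, 1, 9)),
    Backup("full", datetime(2024, 1, 14)),
    Backup("incremental", datetime(2024, 1, 15)),
]

for backup in restore_chain(backups, datetime(2024, 1, 9, 12, 0)):
    print(backup.kind, backup.taken_at.date())
```

Transaction logs would then be replayed on top of the last incremental to reach the exact target time.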

Cloud Server Monitoring and Logging

Effective monitoring and logging are crucial for maintaining the health, performance, and security of cloud-based servers. A robust system allows for proactive identification of issues, efficient troubleshooting, and informed decision-making regarding resource allocation and scaling. Without a comprehensive approach, unexpected downtime, performance bottlenecks, and security breaches become far more likely.

Proactive monitoring and detailed logging provide invaluable insights into server behavior, enabling administrators to anticipate and address potential problems before they impact users or applications. This preventative approach minimizes disruptions and ensures optimal performance.

Key Metrics for Monitoring Cloud Server Performance

Monitoring key performance indicators (KPIs) is essential for understanding the health and efficiency of your cloud servers. These metrics provide a comprehensive overview of resource utilization and application performance, enabling timely intervention to prevent potential issues. Regularly reviewing these metrics allows for informed capacity planning and optimization of resource allocation.

- CPU Utilization: Tracks the percentage of CPU resources being used. High and sustained CPU utilization may indicate the need for additional processing power.

- Memory Usage: Monitors the amount of RAM being consumed. Consistently high memory usage suggests potential memory leaks or the need for more RAM.

- Disk I/O: Measures the rate of data read and write operations to storage. High disk I/O can indicate slow storage performance and potential bottlenecks.

- Network Traffic: Tracks the volume of incoming and outgoing network data. Unusually high network traffic might suggest a security issue or a performance bottleneck.

- Application Performance: Measures response times, error rates, and throughput of applications running on the server. Slow response times or high error rates indicate performance issues within the application.
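Several of the metrics above matter only when they stay high, not when they spike briefly. A minimal sketch of that "sustained" check follows; the function name, the 80% threshold, and the sample readings are assumptions for illustration, and monitoring services typically express the same idea as a number of consecutive evaluation periods.

```python
def sustained_breach(samples, threshold, min_consecutive):
    """Return True if at least `min_consecutive` readings in a row exceed `threshold`."""
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak >= min_consecutive:
            return True
    return False


# Hypothetical CPU readings (percent), one per minute.
brief_spike = [40, 95, 42, 38, 41, 39]
sustained_load = [70, 85, 88, 91, 86, 60]

print(sustained_breach(brief_spike, threshold=80, min_consecutive=3))     # False
print(sustained_breach(sustained_load, threshold=80, min_consecutive=3))  # True
```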

The Importance of Logging and its Role in Troubleshooting

Comprehensive logging plays a vital role in identifying and resolving issues within a cloud-based application. Logs provide a detailed record of events, errors, and system activities, allowing administrators to trace the root cause of problems and implement effective solutions. The value of logging extends beyond immediate troubleshooting; it aids in capacity planning, security auditing, and compliance efforts.

Effective logging involves capturing relevant information about system events, application errors, and user activity. This data should be stored securely and be easily searchable for efficient troubleshooting. Different log levels (e.g., DEBUG, INFO, WARN, ERROR) allow for filtering and prioritization of information. For instance, a high volume of ERROR logs might indicate a critical problem requiring immediate attention, while DEBUG logs can assist in pinpointing the origin of less severe issues.
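The effect of log levels can be seen with Python's standard `logging` module. In this sketch the logger name `checkout` and the messages are made up for the example; the logger is set to INFO, so the DEBUG message is filtered out while the others are emitted.

```python
import logging

# Configure a named logger explicitly so the example does not depend
# on any global logging configuration.
logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)

handler = logging.StreamHandler()
handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
)
logger.addHandler(handler)

logger.debug("cart contents: %s", ["sku-1", "sku-2"])  # suppressed at INFO
logger.info("order %s accepted", "A-1001")
logger.warning("payment retry %d of %d", 2, 3)
logger.error("payment failed for order %s", "A-1001")
```

Lowering the level to `logging.DEBUG` during an investigation restores the detailed messages without any code changes at the call sites.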

Designing a Comprehensive Monitoring and Logging System for a Cloud-Based Application

A comprehensive monitoring and logging system should integrate various tools and technologies to provide a holistic view of the cloud environment. The design should consider scalability, security, and ease of use.

A robust system would incorporate:

- Centralized Logging Platform: A platform capable of aggregating logs from multiple sources (servers, applications, databases) into a single, searchable repository. Examples include the ELK stack (Elasticsearch, Logstash, Kibana) and Splunk.

- Real-time Monitoring Dashboards: Dashboards that display key performance indicators in real-time, allowing for immediate identification of potential problems. Tools like Grafana or Datadog can be used to create custom dashboards.

- Automated Alerting System: A system that automatically sends alerts (e.g., email, SMS) when critical thresholds are exceeded. This ensures timely intervention and minimizes downtime.

- Log Management and Analysis Tools: Tools to efficiently search, filter, and analyze logs to identify patterns and root causes of problems. This might involve using query languages like the one provided by Elasticsearch.

- Security Information and Event Management (SIEM): A system for collecting, analyzing, and managing security-related logs. This helps to detect and respond to security threats.

Integration with Other Cloud Services

The power of cloud-based servers is significantly amplified through seamless integration with other cloud services. This interconnectedness allows for the creation of robust and scalable applications, leveraging the strengths of specialized services without the complexities of managing them individually. Effective integration streamlines workflows, improves efficiency, and unlocks new possibilities for data management and application development.

Cloud server integration with other cloud services, such as databases, storage solutions, and messaging platforms, offers numerous benefits. These benefits extend beyond simple connectivity, impacting aspects like scalability, security, cost-effectiveness, and overall application performance. A well-integrated cloud infrastructure provides a flexible and responsive environment capable of adapting to changing demands.

Benefits of Integrating Cloud Services

Integrating cloud services offers several key advantages. Reduced operational overhead is a significant benefit; managing multiple independent systems is complex and time-consuming. Integration simplifies this, allowing administrators to focus on core applications rather than infrastructure management. Improved scalability is another key advantage; as application needs grow, integrated services can easily scale resources up or down, ensuring optimal performance without manual intervention. Enhanced security is also a considerable benefit; many cloud services incorporate robust security measures, and integration can leverage these features to create a more secure environment overall. Finally, cost optimization is achievable through integrated services; by utilizing pay-as-you-go models and optimizing resource allocation across integrated systems, organizations can reduce their overall cloud spending.

Examples of Cloud Server Integration with SaaS Applications

Many Software as a Service (SaaS) applications rely heavily on cloud server integration. For example, a customer relationship management (CRM) system like Salesforce can integrate with a cloud-based server to process and store customer data, using a cloud database like Amazon RDS for persistent storage. The CRM application can then leverage the server’s computational power to perform complex analyses or generate reports. Similarly, a cloud-based server can act as middleware connecting a company’s internal systems with external SaaS platforms, such as marketing automation tools (e.g., HubSpot) or e-commerce platforms (e.g., Shopify). This integration facilitates data exchange and automates workflows between different applications, improving overall business processes. Another example involves a cloud-based server acting as an API gateway, securely managing access to various cloud services used by a mobile application. The server would handle authentication, authorization, and routing of requests between the mobile app and different backend services, such as a cloud database, a payment gateway, and a notification service. This approach enhances security and improves the overall performance and reliability of the mobile application.
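The API gateway role described above reduces to two decisions per request: is the caller authenticated, and which backend should receive the request. The sketch below models only that decision logic. The route table, the service names, and the hard-coded token are hypothetical placeholders; a real gateway would validate signed tokens and forward the request over the network.

```python
# Hypothetical route table: path prefix -> backend service name.
ROUTES = {
    "/orders": "orders-service",
    "/payments": "payment-gateway",
    "/notify": "notification-service",
}

# Placeholder for real token validation (for example, signed tokens).
VALID_TOKENS = {"demo-token"}


def route_request(path, token):
    """Return (status_code, backend) for an incoming request."""
    if token not in VALID_TOKENS:
        return 401, None  # reject before any backend is contacted
    for prefix, backend in ROUTES.items():
        if path == prefix or path.startswith(prefix + "/"):
            return 200, backend
    return 404, None


print(route_request("/orders/42", "demo-token"))
print(route_request("/orders/42", "wrong-token"))
print(route_request("/unknown", "demo-token"))
```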

Choosing the Right Cloud Server for a Specific Application

Selecting the appropriate cloud server for a specific application is crucial for ensuring optimal performance, scalability, security, and cost-effectiveness. The decision hinges on a careful evaluation of several key factors related to the application’s needs and the capabilities of different cloud server types. A mismatch can lead to underperformance, security vulnerabilities, or excessive costs.

Choosing the right cloud server involves understanding the application’s requirements and aligning them with the features offered by various cloud providers. This includes considering factors such as compute power, storage needs, network bandwidth, and the level of security required. Furthermore, understanding the trade-offs between different server types, such as virtual machines and containers, is essential for making an informed decision.

Factors to Consider When Selecting a Cloud Server

Several critical factors influence the choice of a cloud server. These factors need careful consideration to ensure the chosen server meets the application’s demands effectively.

- Application Requirements: The application’s computational needs (CPU, memory, storage), network bandwidth requirements, and expected traffic volume directly impact the server specifications. A resource-intensive application, such as a video rendering service, will necessitate a more powerful server than a simple website.

- Scalability Needs: The ability to easily scale resources up or down as demand fluctuates is a key advantage of cloud computing. Consider whether the application needs to handle sudden traffic spikes or if its resource needs are relatively static.

- Security Requirements: The sensitivity of the data processed by the application dictates the level of security needed. This includes factors such as data encryption, access control, and compliance with relevant regulations (e.g., HIPAA, GDPR).

- Budget Constraints: Cloud computing offers various pricing models. Understanding these models and aligning them with the budget is vital for cost-effective deployment. Consider factors like on-demand pricing, reserved instances, and spot instances.

- Integration Needs: The application’s need to integrate with other services or systems should be considered. This might involve database integration, message queues, or other third-party APIs.
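
To make the budget factor concrete, the following sketch compares the three pricing models for one server at different utilization levels. The hourly rates are invented for illustration and do not reflect any provider's price list. The sketch also assumes that a reserved instance is billed for every hour whether or not it is used, and it ignores the fact that spot capacity can be reclaimed by the provider.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months

# Hypothetical hourly rates in USD (assumptions, not real prices).
ON_DEMAND_RATE = 0.10
RESERVED_RATE = 0.06
SPOT_RATE = 0.03


def compare_pricing(utilization: float) -> dict:
    """Estimate monthly cost per model for a server busy `utilization` of the time."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    hours_used = HOURS_PER_MONTH * utilization
    return {
        "on_demand": round(ON_DEMAND_RATE * hours_used, 2),
        # Reserved capacity is paid for around the clock in this sketch.
        "reserved": round(RESERVED_RATE * HOURS_PER_MONTH, 2),
        "spot": round(SPOT_RATE * hours_used, 2),
    }
```

With these assumed rates, on-demand is cheaper than reserved for a server that runs a quarter of the time, while reserved wins for a server that runs continuously.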

Comparison of Virtual Machines and Containers

Virtual machines (VMs) and containers represent two distinct approaches to cloud server deployment, each with its own advantages and disadvantages.

| Feature | Virtual Machine | Container |
|---|---|---|
| Resource Isolation | High: Each VM has its own operating system and resources. | Lower: Containers share the host OS kernel, which improves resource efficiency at the cost of weaker isolation. |
| Portability | Relatively low: VM images can be portable, but dependencies on specific hypervisors can limit portability. | High: Containers are highly portable due to their image-based nature and minimal dependencies. |
| Boot Time | Longer: VMs require booting an entire operating system. | Faster: Containers typically start within seconds because no guest OS has to boot. |
| Resource Consumption | Higher: Each VM carries the overhead of a full guest operating system. | Lower: Containers are more lightweight and efficient. |
| Security | Stronger: Hypervisor-level isolation between VMs improves security. | Requires careful consideration of security measures due to the shared OS kernel. |

Optimal Cloud Server Configuration for an E-commerce Platform

For a hypothetical e-commerce platform, an optimal cloud server configuration would likely involve a combination of services. The frontend (website) could be deployed using a highly scalable and available service like a load-balanced group of containers orchestrated by Kubernetes. This allows for easy scaling to handle traffic spikes during peak shopping periods. The backend (database, order processing, etc.) might utilize a managed database service like Amazon RDS or Google Cloud SQL for reliability and scalability. A combination of virtual machines and containers could be used, with VMs for resource-intensive tasks and containers for microservices that require faster deployment and scaling. Redundancy and disaster recovery mechanisms, such as geographically distributed deployments and backups, would be crucial for high availability and data protection. The specific instance types (CPU, memory, storage) would be determined by projected traffic and transaction volume, adjusting dynamically based on real-time demand.
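
The dynamic adjustment mentioned above can be illustrated with the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, which sets the desired replica count to the current count multiplied by the ratio of observed to target metric, rounded up. The sketch below applies that rule to CPU utilization. The minimum and maximum bounds are assumed values, and the real autoscaler adds tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_cpu_percent: float,
    target_cpu_percent: float,
    min_replicas: int = 2,    # assumed floor for availability
    max_replicas: int = 20,   # assumed ceiling for cost control
) -> int:
    """desired = ceil(current * observed / target), clamped to the bounds."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))
```

For example, four frontend containers averaging 90% CPU against a 60% target would scale out to six, while the same group at 15% CPU would shrink only as far as the configured minimum.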

Cloud Server Migration Strategies

Migrating applications to a cloud-based server environment offers numerous benefits, including increased scalability, enhanced flexibility, and reduced infrastructure costs. However, a well-planned migration strategy is crucial for a successful transition. This section explores different approaches to cloud migration, the associated challenges, and a sample migration plan.

Different approaches exist for migrating applications to the cloud, each with its own advantages and disadvantages. The optimal approach depends on factors such as application complexity, existing infrastructure, budget, and desired downtime.

Migration Approaches

Several key migration approaches can be employed, each suited to different circumstances. A phased approach, for example, allows for a more controlled and less disruptive transition. A “big bang” approach, while faster, carries a higher risk.

- Phased Migration: This approach involves migrating applications or components in stages, minimizing disruption to ongoing operations. It allows for thorough testing and validation at each stage, reducing the risk of unforeseen issues. For example, a company might start by migrating non-critical applications before moving on to core systems.

- Big Bang Migration: This involves migrating the entire application environment at once. It is faster but riskier, requiring significant planning and testing to minimize downtime and potential data loss. This approach is best suited for smaller, less complex applications where downtime can be tolerated.

- Hybrid Migration: This strategy involves a combination of on-premises and cloud-based infrastructure. Some applications or components remain on-premises while others are migrated to the cloud. This allows organizations to gradually transition to the cloud while maintaining operational continuity.

- Rehosting (Lift and Shift): This involves moving applications to the cloud with minimal or no code changes. It is a relatively quick and cost-effective approach, but it may not fully leverage the benefits of the cloud.

- Replatforming: This involves making some changes to the application to optimize its performance in the cloud environment. This can improve scalability and efficiency but requires more effort than rehosting.

- Refactoring: This involves redesigning the application to take full advantage of cloud-native services. This approach offers the greatest potential benefits but requires significant effort and resources.

- Repurchasing: This involves replacing existing applications with cloud-based SaaS solutions. This can be a cost-effective approach, but it requires careful evaluation of available SaaS offerings.

- Retiring: This involves decommissioning applications that are no longer needed. This can free up resources and reduce costs.

Challenges in Cloud Server Migration

Migrating applications to the cloud presents several challenges. Careful planning and risk mitigation strategies are essential for a successful transition. These challenges often require significant expertise and resources to overcome effectively.

- Data Migration: Moving large amounts of data to the cloud can be time-consuming and complex, requiring careful planning and execution to minimize downtime and ensure data integrity. Techniques such as data compression and incremental transfers, which copy only the data changed since the previous pass, can help manage this process. Also account for network bandwidth limitations and data transfer costs.

- Application Compatibility: Ensuring that applications are compatible with the cloud environment can be challenging. Testing and validation are crucial to identify and resolve any compatibility issues. Thorough testing should include both functional and performance testing.

- Security Considerations: Security is a paramount concern during cloud migration. Organizations need to ensure that their data and applications are secure in the cloud environment. This includes implementing appropriate security measures such as access controls, encryption, and regular security audits.

- Cost Management: Cloud computing costs can be unpredictable if not carefully managed. Organizations need to develop a clear understanding of their cloud spending and implement strategies to optimize their cloud costs. This includes utilizing cost management tools and implementing strategies such as right-sizing instances.

- Downtime and Business Continuity: Minimizing downtime during migration is crucial. A well-defined migration plan with thorough testing and rollback strategies is essential to ensure business continuity.
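
The bandwidth concern raised under data migration lends itself to a quick back-of-the-envelope estimate. The sketch below converts a data volume and link speed into transfer hours. The 80% link efficiency default is an assumption to account for protocol overhead and contention, and decimal units (1 GB = 8,000 megabits) are used throughout.

```python
def transfer_hours(data_gb: float, bandwidth_mbps: float, efficiency: float = 0.8) -> float:
    """Estimate hours needed to move `data_gb` over a link of `bandwidth_mbps`."""
    if bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    data_megabits = data_gb * 8 * 1000          # gigabytes -> megabits
    seconds = data_megabits / (bandwidth_mbps * efficiency)
    return seconds / 3600
```

Under these assumptions, 10 TB over a 100 Mbps link takes roughly 278 hours, or more than 11 days, which is why large migrations often rely on incremental syncs or a faster dedicated link.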

Sample Migration Plan: On-Premises to Cloud

This plan outlines a phased approach to migrating an on-premises application to a cloud server. The example assumes a three-phase process, but the number of phases may vary depending on the application’s complexity.

| Phase | Activities | Timeline | Dependencies |
|---|---|---|---|
| Phase 1: Assessment and Planning | Application assessment, cloud provider selection, infrastructure planning, security assessment, risk assessment, data migration planning | 4 weeks | None |
| Phase 2: Migration and Testing | Data migration, application deployment, testing (functional, performance, security), configuration management | 8 weeks | Completion of Phase 1 |
| Phase 3: Go-Live and Monitoring | Cutover, post-migration monitoring, performance optimization, ongoing maintenance | 4 weeks | Completion of Phase 2 |
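
Because each phase depends on the completion of the previous one, the durations in the plan translate directly into a week-by-week schedule. The short sketch below derives start and end weeks from the table. It assumes strictly sequential phases with no overlap or slack.

```python
# Phase names and durations (in weeks) taken from the sample plan.
PHASES = [
    ("Phase 1: Assessment and Planning", 4),
    ("Phase 2: Migration and Testing", 8),
    ("Phase 3: Go-Live and Monitoring", 4),
]


def build_schedule(phases):
    """Return (name, start_week, end_week) for strictly sequential phases."""
    schedule = []
    start = 1
    for name, weeks in phases:
        end = start + weeks - 1
        schedule.append((name, start, end))
        start = end + 1  # the next phase begins once this one completes
    return schedule


for name, start, end in build_schedule(PHASES):
    print(f"{name}: weeks {start}-{end}")
```

The plan therefore spans 16 weeks in total, with the cutover phase starting in week 13.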

Future Trends in Cloud-Based Servers

The landscape of cloud-based server technology is constantly evolving, driven by increasing demands for scalability, performance, security, and cost-effectiveness. Several key trends are shaping the future of this crucial infrastructure, impacting how businesses design, deploy, and manage their applications. These trends represent significant shifts in how we approach computing and data management.

Emerging trends in cloud server technology include advancements in edge computing, the expansion of serverless architectures, the increasing adoption of artificial intelligence (AI) and machine learning (ML) for infrastructure management, and the growth of sustainable cloud solutions. These developments are intertwined and collectively redefine the capabilities and limitations of cloud-based servers.

Edge Computing’s Growing Influence

Edge computing is rapidly gaining traction, moving processing power and data storage closer to the source of data generation. This reduces latency, improves bandwidth efficiency, and enables real-time processing for applications like IoT devices, autonomous vehicles, and augmented reality experiences. For example, a smart city utilizing edge computing can process sensor data locally, reducing the reliance on cloud servers for immediate responses and improving the responsiveness of traffic management systems. The integration of edge computing with cloud servers creates a hybrid model where edge devices handle immediate processing needs while the cloud manages larger-scale data storage and analysis.
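
The division of labor between edge and cloud can be sketched as follows: an edge node reacts to raw sensor readings locally and forwards only a compact summary for long-term storage and analysis. The function name, field names, and threshold are hypothetical and chosen purely for illustration.

```python
from statistics import mean
from typing import List


def summarize_at_edge(readings: List[float], alert_threshold: float) -> dict:
    """Reduce a batch of raw readings to a small summary for the cloud."""
    if not readings:
        raise ValueError("readings must not be empty")
    peak = max(readings)
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": peak,
        # The alert is decided locally, without a round trip to the cloud.
        "alert": peak > alert_threshold,
    }
```

Sending one summary per batch instead of every reading is what reduces bandwidth use, while the local alert decision is what keeps latency low.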

The Impact of Serverless Computing

Serverless computing represents a paradigm shift: servers still exist, but their management is abstracted away from the developer. Developers focus on writing and deploying code, while the cloud provider operates the underlying infrastructure and scales resources automatically based on demand. This significantly reduces operational overhead and allows for more efficient resource utilization. Companies such as Netflix have used serverless functions on platforms like AWS Lambda for event-driven tasks such as media processing, which illustrates the model’s scalability. The transition to serverless architectures is driven by the need for faster development cycles and reduced operational complexity.
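
In practice, a serverless function is often just a single handler that the platform invokes once per event. The sketch below follows the `handler(event, context)` shape that AWS Lambda uses for Python functions. The `name` field in the event and the response layout are assumptions for illustration, since the real event structure depends on what triggers the function.

```python
import json


def handler(event, context):
    """Entry point the platform calls for each event; no server code is needed."""
    # "name" is a hypothetical field in the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

The function contains no code for listening on ports, managing processes, or scaling, because those concerns belong to the provider.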

Predictions for the Future of Cloud Server Infrastructure

The future of cloud-based server infrastructure will likely be characterized by increased automation, improved security measures, and a greater emphasis on sustainability. We can anticipate further advancements in AI-driven resource management, leading to more efficient allocation and optimization of computing resources. For instance, predictive analytics can proactively scale resources based on anticipated demand, preventing performance bottlenecks and minimizing costs. Furthermore, the adoption of more energy-efficient hardware and renewable energy sources will play a crucial role in creating environmentally sustainable cloud infrastructure. The rise of quantum computing also presents a long-term potential for significantly enhancing processing power and tackling complex computational problems currently beyond the capabilities of classical computers, though its integration into mainstream cloud infrastructure remains a longer-term prospect.
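
A very simple form of predictive scaling can be sketched with a moving average: forecast the next interval's demand from recent history, then provision enough instances to cover it with some headroom. Real AI-driven systems use far richer models. The window size, per-instance capacity, and 20% headroom here are assumed values.

```python
import math
from typing import List


def forecast_next(demand_history: List[float], window: int = 3) -> float:
    """Forecast the next value as the mean of the last `window` observations."""
    if not demand_history or window <= 0:
        raise ValueError("need a non-empty history and a positive window")
    recent = demand_history[-window:]
    return sum(recent) / len(recent)


def instances_needed(forecast_rps: float, capacity_per_instance: float,
                     headroom: float = 0.2) -> int:
    """Instances required to serve the forecast plus a safety margin."""
    return math.ceil(forecast_rps * (1 + headroom) / capacity_per_instance)
```

With a request history of 100, 200, 300, and 400 requests per second, the three-point forecast is 300, which at 100 requests per second per instance and 20% headroom calls for four instances.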

General Inquiries

What are the different types of cloud server deployments?

The main types are public clouds (shared resources), private clouds (dedicated resources), and hybrid clouds (a combination of both).

How do I choose the right cloud server size?

Consider your application’s resource needs (CPU, RAM, storage) and expected traffic. Start with a smaller instance and scale up as needed.

What is serverless computing?

Serverless computing allows you to run code without managing servers directly. The cloud provider handles the infrastructure.

What are the security implications of using a cloud server?

Security is paramount. Implement strong passwords, utilize firewalls, regularly update software, and follow best practices for data encryption and access control.

How much does a cloud server cost?

Cloud server costs vary significantly based on the provider, instance size, storage, and usage. Most providers offer pay-as-you-go models.