Defining Cloud Server Infrastructure

Cloud server infrastructure represents the underlying hardware and software resources that power cloud computing services. It encompasses a complex network of interconnected components working together to provide on-demand access to computing resources, such as servers, storage, and networking, over the internet. This allows businesses and individuals to access and utilize these resources without the need for significant upfront investment in physical hardware or dedicated IT staff.

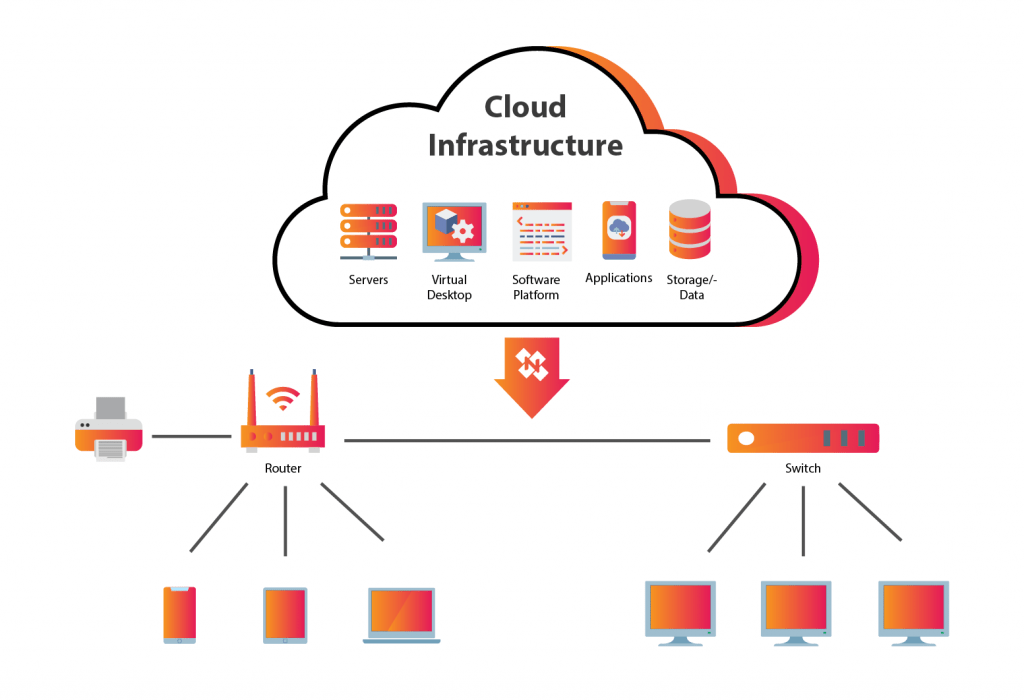

Core Components of Cloud Server Infrastructure

The foundation of cloud server infrastructure rests on several key components that work in concert to deliver the scalability, flexibility, and reliability expected from cloud services. These include physical servers, network infrastructure (routers, switches, and firewalls), virtualization technologies (which allow multiple virtual servers to run on a single physical server), storage systems (ranging from local disks to distributed object storage), and management software (responsible for monitoring, provisioning, and maintaining the entire infrastructure).

Cloud Deployment Models

Cloud deployment models dictate where and how cloud resources are deployed and managed. Each model offers distinct advantages and disadvantages, influencing factors such as security, control, and cost.

- Public Cloud: Resources are provided by a third-party provider (e.g., Amazon Web Services, Microsoft Azure, Google Cloud Platform) and shared among multiple users. This model offers high scalability and cost-effectiveness due to shared infrastructure, but may raise concerns regarding data security and control.

- Private Cloud: Resources are dedicated exclusively to a single organization, typically deployed within the organization’s own data center or a colocation facility. This offers enhanced security and control, but necessitates higher upfront investment and ongoing maintenance costs.

- Hybrid Cloud: Combines elements of both public and private clouds, allowing organizations to leverage the benefits of both models. Sensitive data or critical applications can be hosted on a private cloud, while less sensitive workloads can be run on a public cloud, optimizing cost and performance.

- Multi-cloud: Utilizes multiple public cloud providers to distribute workloads and mitigate vendor lock-in. This approach enhances resilience and flexibility, but requires managing multiple environments and potentially integrating disparate systems.

Comparison of IaaS, PaaS, and SaaS

IaaS, PaaS, and SaaS represent different service models within the cloud computing paradigm, each offering varying levels of abstraction and control. The choice depends on an organization’s technical expertise, budget, and specific application requirements.

| Feature | IaaS (Infrastructure as a Service) | PaaS (Platform as a Service) | SaaS (Software as a Service) |
|---|---|---|---|
| Abstraction Level | Low – Users manage operating systems, applications, and middleware. | Medium – Users manage applications and data, but the provider manages the underlying infrastructure and platform. | High – Users only interact with the application itself; the provider manages everything else. |
| Examples | Amazon EC2, Microsoft Azure Virtual Machines, Google Compute Engine | Google App Engine, AWS Elastic Beanstalk, Heroku | Salesforce, Microsoft Office 365, Google Workspace |
| Control | High | Medium | Low |
| Cost | Usage-based pricing with low upfront cost, but the highest management overhead. | Typically priced above the raw infrastructure it runs on, with less management overhead. | Typically a per-user or subscription fee with little upfront cost and the lowest management overhead. |

Security in Cloud Server Infrastructure

Cloud server infrastructure, while offering numerous advantages in terms of scalability and cost-effectiveness, presents unique security challenges. Understanding and mitigating these risks is crucial for maintaining data integrity, ensuring business continuity, and protecting sensitive information. A robust security posture requires a multi-layered approach encompassing preventative measures, detection mechanisms, and incident response planning.

Common Security Threats and Vulnerabilities

Cloud environments are susceptible to a range of threats, many of which stem from misunderstanding the shared responsibility model inherent in cloud computing. This model divides security responsibilities between the cloud provider, which secures the underlying infrastructure, and the customer, who secures what they deploy and configure on it. Understanding this division is vital for effective security management. Common threats include data breaches resulting from misconfigurations, insider threats, denial-of-service (DoS) attacks targeting application availability, malware infections compromising server integrity, and unauthorized access due to weak authentication mechanisms. Vulnerabilities often arise from outdated software, insecure configurations of virtual machines (VMs), and insufficient network security. For example, a misconfigured security group attached to a virtual machine could inadvertently expose sensitive ports to the public internet, leaving the server vulnerable to exploitation.
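The misconfiguration example above can be checked mechanically. The following is a minimal sketch, assuming a simplified, hypothetical `IngressRule` structure rather than any provider's real API; the list of sensitive ports is illustrative only.

```python
from dataclasses import dataclass

# Ports that should rarely be reachable from the whole internet (illustrative list).
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, simplified inbound firewall rule."""
    cidr: str
    from_port: int
    to_port: int


def find_exposed_ports(rules):
    """Return (port, service) pairs that are open to any address."""
    exposed = []
    for rule in rules:
        if rule.cidr not in OPEN_CIDRS:
            continue
        for port, service in sorted(SENSITIVE_PORTS.items()):
            if rule.from_port <= port <= rule.to_port:
                exposed.append((port, service))
    return exposed


rules = [
    IngressRule("0.0.0.0/0", 443, 443),      # public HTTPS: expected
    IngressRule("0.0.0.0/0", 22, 22),        # SSH open to the world: flagged
    IngressRule("10.0.0.0/16", 5432, 5432),  # database reachable only internally
]
print(find_exposed_ports(rules))  # [(22, 'SSH')]
```

In practice the rules would be read from the provider's API or from infrastructure-as-code files, and a check like this could run as part of a regular audit.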

Best Practices for Securing Cloud Server Infrastructure

Securing cloud infrastructure necessitates a proactive approach that integrates various security controls. Access control is paramount: applying the principle of least privilege grants users and applications only the permissions they need, which limits the potential damage from compromised accounts. Multi-factor authentication (MFA) significantly strengthens authentication, adding an extra layer of security beyond passwords. Data encryption, both in transit and at rest, protects sensitive data from unauthorized access, even if a breach occurs. This includes encrypting databases and storage services, and protecting data transmitted across networks with protocols like TLS. Regular security audits and penetration testing identify vulnerabilities before malicious actors can exploit them. Keeping software and operating systems up to date with the latest security patches is critical in mitigating known vulnerabilities. Implementing robust logging and monitoring capabilities enables early detection of suspicious activities and facilitates rapid incident response. Finally, a well-defined incident response plan outlines procedures for handling security incidents, minimizing their impact and ensuring swift recovery.

Security Architecture for a Hypothetical Cloud-Based Application

Consider a hypothetical e-commerce application deployed on a cloud platform like AWS. The application’s security architecture would incorporate several key elements. A virtual private cloud (VPC) would isolate the application’s resources from other tenants on the platform. Security groups and network access control lists (ACLs) would restrict network traffic based on pre-defined rules, allowing only authorized access to specific ports and services. Web application firewalls (WAFs) would protect the application from common web-based attacks such as SQL injection and cross-site scripting (XSS). Data encryption at rest would be implemented using services like AWS KMS, while data in transit would be secured using TLS. Regular vulnerability scanning and penetration testing would be performed to identify and address potential security weaknesses. Intrusion detection and prevention systems (IDS/IPS) would monitor network traffic for malicious activity, and logging and monitoring tools would provide real-time visibility into the application’s security posture. Finally, a robust incident response plan would detail procedures for handling security incidents, including steps for containment, eradication, and recovery. Layering these controls means that a failure in one does not by itself expose the application.

Scalability and Elasticity in Cloud Server Infrastructure

Cloud server infrastructure offers significant advantages over traditional on-premises solutions, primarily due to its inherent scalability and elasticity. These features allow businesses to dynamically adjust their computing resources to meet fluctuating demands, optimizing costs and ensuring performance. This adaptability is a key differentiator, enabling businesses to react quickly to changing market conditions and growth opportunities.

Cloud server infrastructure enables scalability and elasticity through virtualization and resource pooling. Virtualization allows for the creation of multiple virtual servers from a single physical server, while resource pooling enables the dynamic allocation of resources (compute, storage, network) across multiple virtual servers as needed. This means businesses can easily scale up or down their resources, adding or removing virtual servers as demand fluctuates, without the need for significant upfront investment in hardware or lengthy provisioning times. This flexibility contrasts sharply with traditional infrastructure, where scaling often requires significant lead time and capital expenditure.

Examples of Scalability and Elasticity in Business Applications

Businesses across various sectors leverage the scalability and elasticity of cloud infrastructure to their advantage. E-commerce companies, for instance, often experience significant spikes in traffic during peak shopping seasons like Black Friday or Cyber Monday. By utilizing autoscaling features, they can automatically provision additional servers to handle the increased load, ensuring a smooth and responsive shopping experience for customers. Similarly, streaming services use autoscaling to manage the fluctuating demands of concurrent viewers, dynamically allocating more resources during peak viewing times and scaling down during periods of low activity. Software-as-a-Service (SaaS) providers rely on scalability to accommodate a growing customer base and maintain high service availability. These providers can quickly add resources to handle increased user activity and data storage needs, adapting to the evolving demands of their business.

Hypothetical Scenario: Autoscaling a Website During a Marketing Campaign

Imagine a small business launching a new marketing campaign expected to significantly increase website traffic. Before implementing autoscaling, the website relies on a single virtual server with limited resources. During the campaign launch, the website experiences a sudden surge in traffic, resulting in slow loading times and potential downtime.

However, with autoscaling enabled, the cloud platform automatically detects the increased traffic and provisions additional virtual servers to distribute the load. This ensures that the website remains responsive and accessible to all users, even during peak traffic periods. The following table illustrates the resource utilization before and after autoscaling:

| Metric | Before Autoscaling | After Autoscaling | Difference |
|---|---|---|---|
| CPU Utilization | 95% | 30% | -65% |
| Memory Utilization | 98% | 45% | -53% |
| Request Response Time | 10 seconds | 0.5 seconds | -9.5 seconds |
| Number of Servers | 1 | 5 | +4 |
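To make the scaling decision concrete, here is a minimal sketch of a target-tracking rule. It assumes load spreads evenly across servers, which real workloads only approximate, so it is not meant to reproduce the figures in the table; the function name, the 50% target, and the server limits are illustrative assumptions, not any provider's defaults.

```python
import math


def desired_server_count(current_servers, avg_cpu_percent, target_cpu_percent,
                         min_servers=1, max_servers=10):
    """Target-tracking rule: size the fleet so average CPU moves toward the target."""
    raw = current_servers * avg_cpu_percent / target_cpu_percent
    # Round up so the fleet is never undersized, then clamp to the allowed range.
    return max(min_servers, min(max_servers, math.ceil(raw)))


print(desired_server_count(1, 95, 50))  # 2: one server at 95% CPU scales out
print(desired_server_count(5, 20, 50))  # 2: five mostly idle servers scale in
```

The upper limit matters for cost control: without it, a traffic spike (or an attack) could provision an unbounded number of servers.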

Cost Optimization in Cloud Server Infrastructure

Managing cloud costs effectively is crucial for maintaining a healthy budget and maximizing the return on investment in cloud services. Uncontrolled spending can quickly escalate, impacting profitability. This section explores strategies for optimizing cloud infrastructure costs, examining pricing models and server configurations to achieve cost-effectiveness.

Strategies for Optimizing Cloud Server Infrastructure Costs

Effective cost optimization requires a multifaceted approach. It involves proactive planning, regular monitoring, and the strategic utilization of cloud provider features. This includes right-sizing instances, leveraging spot instances, and optimizing storage solutions.

Cloud Provider Pricing Models

Major cloud providers offer a variety of pricing models designed to cater to different needs and budgets. Understanding these models is key to selecting the most cost-effective option for your specific workload.

- Pay-as-you-go: This model charges you only for the resources you consume, offering flexibility and scalability. It’s ideal for unpredictable workloads or projects with varying resource requirements. However, costs can fluctuate significantly depending on usage.

- Reserved Instances: These offer significant discounts compared to pay-as-you-go pricing in exchange for committing to a specific instance type and duration (e.g., one or three years). Reserved Instances are cost-effective for predictable, long-term workloads. The upfront commitment reduces the overall cost per hour significantly.

- Savings Plans: Savings Plans provide a discount on compute usage in exchange for committing to a consistent amount of spend (for example, a fixed dollar amount per hour) over a one- or three-year term. This provides more flexibility than Reserved Instances, as the commitment is not necessarily tied to a specific instance type. Savings Plans are a good choice for workloads with a steady baseline of usage whose instance mix may change over time.

- Spot Instances: Spot instances are spare compute capacity offered at significantly reduced prices. They are ideal for fault-tolerant, flexible workloads that can handle interruptions. The trade-off is that instances can be terminated with short notice.
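The trade-off between pay-as-you-go and a commitment comes down to a break-even utilization. The sketch below uses made-up hourly rates (0.10 and 0.06) purely for illustration; actual prices vary by provider, region, and instance type.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12 months


def monthly_cost_on_demand(hourly_rate, hours_used):
    """Pay-as-you-go: billed only for the hours actually used."""
    return hourly_rate * hours_used


def monthly_cost_reserved(hourly_rate):
    """Commitment: billed for every hour of the month, used or not."""
    return hourly_rate * HOURS_PER_MONTH


def break_even_utilization(on_demand_rate, reserved_rate):
    """Fraction of the month above which the committed rate is cheaper."""
    return reserved_rate / on_demand_rate


# Hypothetical rates, for illustration only.
on_demand, reserved = 0.10, 0.06
print(f"{break_even_utilization(on_demand, reserved):.0%}")  # 60%
print(f"{monthly_cost_on_demand(on_demand, 300):.2f}")       # 30.00
print(f"{monthly_cost_reserved(reserved):.2f}")              # 43.80
```

With these assumed rates, a server that runs 300 hours a month is cheaper on pay-as-you-go, while one that runs more than about 60% of the month is cheaper under a commitment.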

Cost-Effectiveness of Different Cloud Server Configurations

The cost-effectiveness of cloud server configurations depends on various factors, including instance type, operating system, storage, and networking. Choosing the right configuration is essential for optimizing costs without compromising performance.

| Configuration | Cost Considerations | Suitable Workloads |
|---|---|---|
| Small, single-core instance with basic storage | Lower initial cost, but may lack performance for demanding applications | Low-traffic websites, small development environments |
| Large, multi-core instance with high-performance storage | Higher initial cost, but provides greater performance and scalability | High-traffic websites, large-scale applications, database servers |
| Containerized environment using Kubernetes | Can optimize resource utilization and reduce costs by only allocating resources when needed | Microservices architectures, applications requiring high scalability and flexibility |

Cloud Server Infrastructure Management

Effective management of cloud server infrastructure is crucial for maintaining operational efficiency, ensuring security, and optimizing costs. This involves leveraging a combination of tools, techniques, and automation strategies to monitor, control, and scale resources dynamically. Proper management minimizes downtime, enhances performance, and simplifies complex tasks.

Tools and Techniques for Managing Cloud Server Infrastructure

Cloud providers offer a range of integrated management tools, including dashboards, APIs, and command-line interfaces (CLIs). These tools provide visibility into resource utilization, performance metrics, and security posture. Third-party tools further enhance management capabilities, offering features like automated monitoring, centralized logging, and configuration management. Examples include Ansible, Chef, and Puppet for configuration management and Terraform for infrastructure-as-code. Monitoring tools like Datadog, Prometheus, and Grafana provide real-time insights into system health and performance. These tools allow administrators to proactively identify and address potential issues, improving overall system stability and reliability.

Automation Techniques for Managing Cloud Resources

Automation is key to efficient cloud server infrastructure management. Infrastructure-as-code (IaC) tools like Terraform and CloudFormation allow administrators to define and manage infrastructure through code, enabling repeatable deployments and configuration management. Configuration management tools like Ansible, Chef, and Puppet automate the configuration of servers and applications, ensuring consistency across environments. These tools reduce manual intervention, minimizing human error and improving efficiency. For example, Ansible can automate the deployment of a web application across multiple servers, ensuring each server is configured identically. This automation significantly reduces deployment time and ensures consistency. Automated scaling based on resource utilization further enhances efficiency by dynamically adjusting resources to meet demand.
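The core idea behind these tools is to compare a declared desired state with the actual state and derive the changes needed. The following is a simplified sketch of that idea, not how Terraform or Ansible are implemented; the resource names and the dictionary format are hypothetical.

```python
def plan_changes(desired, actual):
    """Compare desired and actual state and return the actions needed to converge."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical resource definitions, keyed by name.
desired = {"web-1": {"size": "small"}, "web-2": {"size": "small"}}
actual = {"web-1": {"size": "medium"}, "old-db": {"size": "large"}}

print(plan_changes(desired, actual))
# [('update', 'web-1'), ('create', 'web-2'), ('delete', 'old-db')]
print(plan_changes(desired, desired))  # []: nothing to do once converged
```

Because the plan is empty once the actual state matches the desired state, running the process repeatedly is safe, which is what makes deployments repeatable.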

Deploying a Web Application on a Cloud Server

Deploying a web application involves several steps, each requiring careful planning and execution. This process can be significantly streamlined through automation.

- Provisioning the Server: Use a cloud provider’s console or API to create a virtual machine (VM) with the necessary specifications (CPU, memory, storage, operating system). This process can be automated using IaC tools.

- Setting up Networking: Configure network settings, including IP addresses, security groups (firewalls), and load balancers. Again, automation tools can simplify this.

- Installing Software Dependencies: Install the necessary software components, such as a web server (Apache, Nginx), database (MySQL, PostgreSQL), and programming language runtime (Python, Node.js). This step is highly amenable to automation using package managers and configuration management tools.

- Deploying the Application Code: Upload the application code to the server. This can be automated using tools like Git and deployment pipelines such as Jenkins or GitLab CI/CD.

- Configuring the Application: Configure the application to connect to the database and other necessary services. Configuration management tools can help ensure consistency across deployments.

- Testing and Monitoring: Thoroughly test the application to ensure it functions correctly. Implement monitoring tools to track performance and identify potential issues.
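The steps above can be sketched as an ordered pipeline that stops at the first failure, which is roughly what a CI/CD tool does with its stages. This is a minimal illustration; the step names and the placeholder functions are hypothetical stand-ins for real provisioning and deployment commands.

```python
def run_pipeline(steps):
    """Run (name, callable) steps in order; stop at the first failure."""
    completed = []
    for name, step in steps:
        try:
            step()
        except Exception as exc:
            return completed, f"{name} failed: {exc}"
        completed.append(name)
    return completed, None


def succeed():
    """Placeholder for a step that works."""


def fail_tests():
    """Placeholder for a step that fails."""
    raise RuntimeError("health check returned 500")


steps = [
    ("provision server", succeed),
    ("set up networking", succeed),
    ("install dependencies", succeed),
    ("deploy code", succeed),
    ("configure application", succeed),
    ("test and monitor", fail_tests),
]
completed, error = run_pipeline(steps)
print(len(completed), error)
# 5 test and monitor failed: health check returned 500
```

Returning the list of completed steps makes it clear how far a deployment got, which is useful when deciding whether to retry or roll back.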

High Availability and Disaster Recovery in Cloud Server Infrastructure

High availability and disaster recovery are critical aspects of any robust cloud server infrastructure. They ensure business continuity by minimizing downtime and data loss in the event of unexpected outages or disasters. Strategies implemented must account for various failure points, from hardware malfunctions to natural disasters, and encompass preventative measures and recovery procedures.

Ensuring continuous operation requires a multi-faceted approach leveraging redundancy and failover mechanisms to maintain service uptime. This involves proactively designing systems to withstand failures and implementing procedures to quickly restore services should a failure occur. A well-defined disaster recovery plan is essential for minimizing disruption and ensuring a swift return to normal operations.

Redundancy and Failover Mechanisms

Redundancy is the cornerstone of high availability. It involves creating duplicate systems or components so that if one fails, another can immediately take over. This can apply to servers, networks, storage, and databases. For example, a redundant server setup might involve two identical servers running the same application, with a load balancer distributing traffic between them. If one server fails, the load balancer automatically redirects traffic to the other, ensuring continuous service. Failover mechanisms are automated processes that switch operation from a primary system to a secondary system in the event of a failure. These mechanisms typically involve sophisticated monitoring systems that detect failures and trigger the automatic switchover. The speed and efficiency of failover are crucial in minimizing downtime. A well-designed failover system will ensure a seamless transition with minimal disruption to users.
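A failover mechanism of this kind can be sketched as a monitor that switches to the secondary after several consecutive failed health checks. The class name, the threshold of three, and the server names below are illustrative assumptions; this sketch also fails back as soon as the primary passes a check, which production systems often delay to avoid flapping.

```python
class FailoverMonitor:
    """Switch to the secondary after several consecutive failed health checks."""

    def __init__(self, primary, secondary, failure_threshold=3):
        self.primary = primary
        self.secondary = secondary
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0
        self.active = primary

    def record_check(self, primary_healthy):
        """Feed in one health-check result and return the active system."""
        if primary_healthy:
            self.consecutive_failures = 0
            self.active = self.primary
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.active = self.secondary
        return self.active


monitor = FailoverMonitor("server-a", "server-b")
for result in [True, False, False, False, True]:
    print(result, "->", monitor.record_check(result))
```

Requiring several consecutive failures avoids switching over on a single dropped health check, at the cost of a slightly slower reaction to a real outage.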

Designing a Disaster Recovery Plan for a Cloud-Based Application

A comprehensive disaster recovery plan for a cloud-based application must detail procedures for backing up and restoring data and applications. This plan should define roles and responsibilities, recovery time objectives (RTOs), and recovery point objectives (RPOs). RTO specifies the maximum acceptable downtime after an outage, while RPO defines the maximum acceptable data loss, measured as a span of time. For example, a financial institution might have a very low RTO and RPO, while a blog might tolerate a higher RTO and RPO. The plan should outline specific steps for data backup, including frequency, location, and method of storage. This might involve using cloud-based backup services, replicating data to multiple regions, or a combination of both. The restoration procedure should be clearly defined, specifying the steps to recover data and applications from backups and restore services to a functional state. Regular testing of the disaster recovery plan is crucial to ensure its effectiveness and identify any weaknesses. This testing should simulate various failure scenarios, allowing for adjustments and improvements to the plan. A realistic disaster recovery drill, involving key personnel, will highlight potential bottlenecks and refine the recovery process. This ensures the plan is not just a document but a tested and reliable strategy.
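RTO and RPO translate directly into checks on backup frequency and restore time. The sketch below assumes the worst case for data loss is a failure just before the next scheduled backup; the function name and the example durations are hypothetical.

```python
from datetime import timedelta


def meets_objectives(backup_interval, estimated_restore_time, rpo, rto):
    """Check a backup schedule and restore estimate against RPO and RTO.

    Worst-case data loss equals the backup interval, because a failure can
    happen just before the next backup runs.
    """
    return {
        "rpo_met": backup_interval <= rpo,
        "rto_met": estimated_restore_time <= rto,
    }


# Hypothetical plan: nightly backups, two-hour restore, one-hour RPO, four-hour RTO.
result = meets_objectives(
    backup_interval=timedelta(hours=24),
    estimated_restore_time=timedelta(hours=2),
    rpo=timedelta(hours=1),
    rto=timedelta(hours=4),
)
print(result)  # {'rpo_met': False, 'rto_met': True}
```

In this example the restore is fast enough, but nightly backups cannot satisfy a one-hour RPO, so the plan would need more frequent backups or continuous replication.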

Geographic Redundancy and Multi-Region Deployments

Deploying applications across multiple geographic regions significantly enhances resilience against regional outages or disasters. This approach, known as geographic redundancy, ensures that even if one region experiences a disruption, the application remains accessible from other regions. Multi-region deployments involve replicating data and applications across regions, usually in addition to spreading them across availability zones within each region. This provides geographic diversity and protects against localized failures. For instance, a company might deploy its application in multiple AWS regions (e.g., us-east-1 and us-west-2), ensuring continuous operation even if one region suffers an outage. The cost implications of multi-region deployments need careful consideration, as managing multiple environments increases operational complexity and expense. However, the enhanced availability and disaster recovery capabilities often outweigh the added costs for mission-critical applications.
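A rough calculation shows why a second region helps. The sketch below assumes regions fail independently and that traffic can always be served by any surviving region; both are simplifying assumptions, and the 99.9% figure is illustrative rather than any provider's published SLA.

```python
HOURS_PER_YEAR = 8760


def combined_availability(region_availability, regions):
    """Probability that at least one of several independent regions is up."""
    return 1 - (1 - region_availability) ** regions


def expected_downtime_hours(availability):
    """Expected hours of downtime per year at a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


single = 0.999  # illustrative per-region availability
dual = combined_availability(single, 2)
print(f"{expected_downtime_hours(single):.2f} hours/year")  # 8.76 hours/year
print(f"{expected_downtime_hours(dual):.4f} hours/year")    # 0.0088 hours/year
```

Correlated failures, such as a shared dependency or a bad deployment pushed to every region, make real-world gains smaller than this idealized figure.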

Monitoring and Performance Optimization of Cloud Server Infrastructure

Effective monitoring and performance optimization are crucial for ensuring the reliability, scalability, and cost-efficiency of cloud server infrastructure. Proactive monitoring allows for early identification of potential issues, preventing performance degradation and minimizing downtime. Optimization strategies, on the other hand, help maximize resource utilization and enhance application responsiveness.

Monitoring and optimization are intertwined processes. Data gathered through monitoring informs optimization strategies, leading to a continuous improvement cycle. This iterative approach is vital for maintaining a high-performing and cost-effective cloud environment.

Methods for Monitoring Cloud Server Infrastructure Performance

Monitoring cloud server infrastructure performance involves utilizing a variety of tools and techniques to collect and analyze data on various aspects of the system. This data provides valuable insights into the health and efficiency of the infrastructure, enabling proactive problem-solving and performance optimization.

Several methods exist for gathering this critical performance data. These methods can be broadly categorized into agent-based and agentless monitoring, each with its own strengths and weaknesses.

- Agent-based monitoring: This approach involves installing software agents on individual servers or virtual machines. These agents collect performance data locally and transmit it to a central monitoring system. Agent-based monitoring offers detailed, granular data but requires the installation and management of agents, adding some complexity.

- Agentless monitoring: This method relies on accessing performance data remotely, often through APIs provided by the cloud provider. It eliminates the need for agent installation, simplifying deployment and maintenance. However, the data collected might be less granular than agent-based monitoring.

- Cloud Provider Monitoring Tools: Most cloud providers offer built-in monitoring tools and dashboards. These tools provide a centralized view of the infrastructure’s performance, often integrating seamlessly with other cloud services. Examples include Amazon CloudWatch, Microsoft Azure Monitor, and Google Cloud Monitoring.

- Third-Party Monitoring Tools: Numerous third-party monitoring tools provide comprehensive monitoring capabilities, often offering advanced features like anomaly detection and automated alerting. These tools can integrate with various cloud providers and offer customizable dashboards and reporting.

Key Performance Indicators (KPIs) to Track

Tracking relevant KPIs provides a quantifiable measure of cloud server infrastructure performance. By regularly monitoring these metrics, administrators can identify bottlenecks, optimize resource allocation, and ensure application responsiveness.

The selection of KPIs depends on the specific application and infrastructure. However, some common KPIs include:

| KPI | Description | Significance |
|---|---|---|
| CPU Utilization | Percentage of CPU capacity being used. | High CPU utilization can indicate a need for more powerful instances or application optimization. |
| Memory Utilization | Percentage of RAM being used. | High memory utilization can lead to performance degradation and application crashes. |
| Disk I/O | Rate of data read and write operations on disk. | High disk I/O can indicate slow storage performance, potentially requiring faster storage solutions. |
| Network Latency | Time delay in data transmission over the network. | High latency can negatively impact application responsiveness and user experience. |
| Application Response Time | Time taken for an application to respond to a request. | Slow response times indicate performance issues that need addressing. |
| Error Rate | Frequency of errors occurring within the application or infrastructure. | High error rates signal potential problems requiring investigation. |
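Two of these KPIs, error rate and application response time, can be computed from raw request samples. The sketch below uses made-up sample data, counts only HTTP 5xx responses as errors (an assumption), and uses the nearest-rank method for percentiles.

```python
import math


def error_rate(status_codes):
    """Fraction of requests that returned a server error (HTTP 5xx)."""
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical samples: response times in milliseconds and HTTP status codes.
response_times_ms = [120, 80, 95, 400, 110, 105, 90, 100, 85, 2000]
status_codes = [200] * 8 + [500, 503]

print(error_rate(status_codes))           # 0.2
print(percentile(response_times_ms, 50))  # 100
print(percentile(response_times_ms, 95))  # 2000
```

The gap between the median (100 ms) and the 95th percentile (2000 ms) shows why averages alone can hide the slow requests that some users actually experience.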

Strategies for Optimizing Cloud Server Performance

Optimizing cloud server performance involves a multifaceted approach, encompassing both infrastructure and application-level adjustments. The goal is to enhance application responsiveness, reduce latency, and improve resource utilization.

Several strategies can be employed to achieve these objectives. These strategies often involve a combination of technical adjustments and operational best practices.

- Right-sizing instances: Selecting appropriately sized virtual machines based on application requirements avoids over-provisioning or under-provisioning resources.

- Caching: Implementing caching mechanisms reduces the load on databases and other backend services, improving application response time. Examples include using a CDN for static content and Redis for session data.

- Load balancing: Distributing traffic across multiple servers prevents any single server from becoming overloaded, ensuring high availability and consistent performance.

- Content Delivery Networks (CDNs): Utilizing CDNs brings content closer to users geographically, reducing latency and improving download speeds.

- Database optimization: Optimizing database queries, indexing, and schema design can significantly improve database performance and application responsiveness.

- Code optimization: Identifying and resolving performance bottlenecks in application code can dramatically improve application speed and efficiency.
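As one illustration of the caching strategy in the list above, the following is a minimal in-process cache with a time-to-live (TTL). It is a sketch only: the class name and the `loader` callback are hypothetical, and a production system would more likely use a shared cache such as Redis.

```python
import time


class TTLCache:
    """Cache values for a fixed number of seconds to spare the backend."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}    # key -> (value, expiry time)

    def get_or_load(self, key, loader):
        """Return the cached value, calling loader(key) only on a miss or expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]
        value = loader(key)
        self._store[key] = (value, now + self.ttl)
        return value


backend_calls = []


def slow_lookup(key):
    """Stand-in for an expensive database query."""
    backend_calls.append(key)
    return key.upper()


cache = TTLCache(ttl_seconds=60)
cache.get_or_load("product-42", slow_lookup)
cache.get_or_load("product-42", slow_lookup)
print(len(backend_calls))  # 1: the second request was served from the cache
```

The TTL bounds how stale a cached value can become, so it should be chosen according to how quickly the underlying data changes.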

Cloud Server Infrastructure for Different Industries

Cloud server infrastructure has become a cornerstone of modern business operations, adapting its capabilities to meet the unique demands of various industries. The flexibility and scalability of cloud solutions allow organizations across diverse sectors to optimize their IT resources, improve efficiency, and drive innovation. This section explores how different industries leverage cloud server infrastructure, highlighting specific solutions and contrasting their infrastructure needs.

Cloud Server Infrastructure in Healthcare

The healthcare industry faces stringent regulations regarding data privacy and security (HIPAA compliance in the US, for example), demanding robust and secure cloud infrastructure. Cloud solutions enable secure storage and processing of sensitive patient data, facilitating telehealth services, electronic health records (EHR) management, and advanced medical research. Examples include the use of cloud-based platforms for storing and analyzing medical images, facilitating remote patient monitoring, and powering AI-driven diagnostic tools. These solutions often prioritize data encryption, access control, and audit trails to ensure compliance and patient privacy. High availability and disaster recovery are also critical considerations, given the life-critical nature of healthcare data and applications.

Cloud Server Infrastructure in Finance

The financial services industry relies heavily on secure and reliable infrastructure to handle sensitive financial transactions and data. Cloud solutions provide the scalability and security needed to manage large datasets, process high volumes of transactions, and comply with strict regulatory requirements. Examples include using cloud-based platforms for fraud detection, risk management, and high-frequency trading. These applications demand low latency, high throughput, and robust security measures to protect sensitive financial data from unauthorized access and cyber threats. Compliance with regulations such as PCI DSS is paramount, requiring rigorous security controls and auditing capabilities. Financial institutions often employ hybrid cloud strategies, combining public cloud services with on-premise infrastructure to balance security and flexibility.

Cloud Server Infrastructure in Retail

The retail industry utilizes cloud server infrastructure to manage inventory, process online orders, personalize customer experiences, and analyze sales data. Cloud-based solutions enable retailers to scale their infrastructure to meet peak demands during promotional periods or holiday seasons. Examples include using cloud-based e-commerce platforms, implementing personalized recommendation engines, and leveraging big data analytics to understand customer behavior and optimize marketing campaigns. Retailers often utilize cloud services for disaster recovery and business continuity planning, ensuring uninterrupted operations during outages or unforeseen events. Scalability and cost-efficiency are key considerations, as retail businesses experience fluctuating demand throughout the year.

Comparing Infrastructure Requirements Across Industries

While all industries benefit from the scalability and cost-effectiveness of cloud infrastructure, their specific requirements vary significantly. Healthcare prioritizes data security and compliance, finance emphasizes low latency and high security, and retail focuses on scalability and cost-efficiency. These differing priorities influence the choice of cloud deployment models (public, private, hybrid), security protocols, and service level agreements (SLAs). The choice of cloud provider and specific services will also vary based on these industry-specific needs and regulatory compliance requirements. For instance, a healthcare provider might choose a cloud provider with strong HIPAA compliance certifications, while a financial institution might opt for a provider with robust security features and compliance with regulations like PCI DSS.

Emerging Trends in Cloud Server Infrastructure

The cloud computing landscape is in constant evolution, driven by the ever-increasing demands for scalability, efficiency, and cost-effectiveness. Several emerging trends are reshaping how businesses design, deploy, and manage their cloud server infrastructure, promising both significant advantages and new challenges. This section will explore some of the most impactful of these trends.

Serverless computing and edge computing represent two particularly significant shifts in the paradigm of cloud infrastructure. These approaches offer unique benefits but also introduce complexities that organizations must address for successful implementation.

Serverless Computing

Serverless computing shifts the developer's focus from managing servers to writing code. Instead of provisioning and managing virtual machines or containers, developers deploy their code as functions, which are automatically triggered and scaled based on demand. Servers still exist, but the provider operates them, which reduces operational overhead and allows for greater scalability and cost efficiency. AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions are prominent examples of serverless platforms. The impact on businesses includes faster development cycles, reduced operational costs, and improved scalability for event-driven applications. However, challenges include more complex debugging, vendor lock-in, and cold starts that can add latency. Careful consideration of these factors is crucial for successful serverless adoption.
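To make the function model concrete, here is a minimal sketch of an event-driven function written in the `handler(event, context)` style that AWS Lambda uses for Python. The function's purpose and the event shape (an `order_id` and a list of `items`) are assumptions made for illustration, not part of any platform's API.

```python
import json


def handler(event, context):
    """Hypothetical order-total function; the event fields are illustrative."""
    items = event.get("items", [])
    total = sum(item["price"] * item["quantity"] for item in items)
    return {
        "statusCode": 200,
        "body": json.dumps({"order_id": event.get("order_id"), "total": total}),
    }


if __name__ == "__main__":
    # Local invocation; on a serverless platform the runtime calls handler().
    sample = {"order_id": "A-1", "items": [{"price": 5, "quantity": 3}]}
    print(handler(sample, None))
```

The function holds no state between calls, which is what lets a platform run as many copies in parallel as demand requires.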

Edge Computing

Edge computing brings computation and data storage closer to the source of data generation, reducing latency and bandwidth requirements. Instead of relying solely on centralized cloud data centers, processing occurs at the network edge, often within devices or gateways. This approach is particularly beneficial for applications requiring real-time processing, such as IoT devices, autonomous vehicles, and augmented reality experiences. The impact on businesses includes improved application responsiveness, reduced bandwidth costs, and enhanced data security. However, challenges include managing distributed infrastructure, ensuring data consistency across multiple edge locations, and dealing with limited resources at the edge. Careful planning and investment in edge infrastructure management are crucial for effective deployment.
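The bandwidth saving can be illustrated with a small sketch: an edge gateway reduces a batch of raw sensor readings to one compact summary and sends only that upstream. The function name, the summary fields, and the temperature figures are hypothetical, chosen only to show the pattern.

```python
from statistics import mean


def summarize_readings(readings, alert_threshold):
    """Reduce a batch of raw sensor readings to one compact summary.

    Intended to run on the edge device or gateway; only the summary
    would be sent to the central cloud.
    """
    if not readings:
        return None
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        "alert": max(readings) > alert_threshold,
    }


if __name__ == "__main__":
    batch = [20, 22, 21, 35, 22]  # hypothetical temperature samples
    print(summarize_readings(batch, alert_threshold=30))
```

Because the alert flag is computed locally, a time-critical reaction does not have to wait for a round trip to a central data center.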

Artificial Intelligence (AI) and Machine Learning (ML) Integration in Cloud Infrastructure

The integration of AI and ML is fundamentally altering cloud infrastructure management. AI-powered tools are increasingly used for tasks such as predictive scaling, automated security threat detection, and performance optimization. This leads to more efficient resource utilization, improved security posture, and enhanced operational efficiency. For example, AI can predict future resource needs based on historical usage patterns, proactively scaling resources to avoid performance bottlenecks. However, challenges include the need for skilled personnel to manage and maintain AI/ML systems, ensuring data privacy and security, and the potential for biased algorithms.
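As a rough illustration of predictive scaling, the sketch below forecasts the next load value from recent history and converts it into an instance count. A simple moving average stands in for the ML model here; production systems typically use more sophisticated forecasting. The function names, the 25% headroom, and the load figures are all assumptions.

```python
import math


def forecast_next(usage_history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if not usage_history:
        raise ValueError("usage_history must not be empty")
    recent = usage_history[-window:]
    return sum(recent) / len(recent)


def instances_needed(forecast_load, capacity_per_instance, headroom=0.25):
    """Instances required to serve the forecast load plus a safety margin."""
    target = forecast_load * (1 + headroom)
    return max(1, math.ceil(target / capacity_per_instance))


if __name__ == "__main__":
    history = [400, 500, 600]  # hypothetical requests per second
    load = forecast_next(history)
    print(load, instances_needed(load, capacity_per_instance=100))
```

Scaling to the forecast plus headroom, rather than to current load, is what allows capacity to be in place before a bottleneck appears.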

Increased Focus on Sustainability in Cloud Infrastructure

Growing environmental concerns are driving a shift towards more sustainable cloud infrastructure. Cloud providers are increasingly focusing on reducing their carbon footprint through initiatives such as using renewable energy sources, optimizing data center efficiency, and implementing carbon offset programs. Businesses are also adopting sustainable practices, such as optimizing their applications for energy efficiency and selecting cloud providers with strong sustainability commitments. Google Cloud’s commitment to carbon neutrality and Microsoft’s efforts to power its data centers with renewable energy are notable industry efforts. The challenges lie in accurately measuring and reporting carbon emissions across the entire cloud infrastructure lifecycle, and in balancing sustainable practices with the demand for high performance and scalability.

Choosing a Cloud Provider

Selecting the right cloud provider is crucial for the success of any cloud-based application. The decision hinges on a careful evaluation of various factors specific to your needs and priorities. This section compares major providers and outlines key considerations for making an informed choice.

Comparison of Major Cloud Providers

Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) are the leading cloud providers, each offering a vast array of services. However, their strengths and weaknesses differ, making a direct comparison essential.

AWS boasts the largest market share and the most mature ecosystem, providing a wide range of services and a large community of developers. Azure excels in hybrid cloud solutions and strong integration with Microsoft products. GCP stands out with its powerful data analytics and machine learning capabilities, often favored by organizations heavily invested in big data. Each provider offers comparable core services such as compute, storage, and networking, but their specific features, pricing models, and support systems vary significantly. For example, AWS’s S3 is a highly popular and mature object storage solution, while Azure’s Blob Storage and GCP’s Cloud Storage offer similar functionalities with distinct pricing structures and performance characteristics. The choice often comes down to specific application requirements and existing infrastructure.

Factors to Consider When Selecting a Cloud Provider

Several critical factors influence the selection of a cloud provider. These include:

- Service Offerings: Does the provider offer the specific services required by your application (e.g., compute instances, databases, machine learning)? Consider the level of maturity and support for each service.

- Pricing Model: Cloud providers employ various pricing models (pay-as-you-go, reserved instances, spot instances). Analyze the cost implications of each model based on your projected usage and scale.

- Geographic Location and Data Sovereignty: Data residency requirements and latency considerations are crucial. Choose a provider with data centers in the desired geographic regions to comply with regulations and ensure optimal performance.

- Security and Compliance: Evaluate the security features and compliance certifications (e.g., ISO 27001, SOC 2) offered by each provider to ensure the protection of your data and compliance with industry regulations.

- Scalability and Elasticity: The ability to easily scale resources up or down based on demand is critical. Assess the scalability and elasticity features offered by each provider to meet your application’s performance requirements.

- Support and Documentation: The quality of support and documentation is essential, particularly during initial setup and troubleshooting. Consider the availability of 24/7 support and the comprehensiveness of the provider’s documentation.

- Integration with Existing Systems: If your application relies on existing on-premises infrastructure or other cloud services, assess the ease of integration with the chosen cloud provider.
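The pricing-model factor in the list above lends itself to a quick break-even calculation. The sketch below compares pay-as-you-go billing with a flat monthly commitment. The rates (10 cents per hour, 4,380 cents per month) are made-up figures rather than any provider's prices, and amounts are kept in integer cents to avoid rounding noise.

```python
def on_demand_cost(hours_used, hourly_rate_cents):
    """Pay-as-you-go: billed only for the hours actually used."""
    return hours_used * hourly_rate_cents


def break_even_hours(hourly_rate_cents, monthly_commitment_cents):
    """Monthly usage at which a flat commitment costs the same as on-demand."""
    return monthly_commitment_cents / hourly_rate_cents


def cheaper_option(hours_used, hourly_rate_cents, monthly_commitment_cents):
    """Return which model costs less for the projected monthly usage."""
    if on_demand_cost(hours_used, hourly_rate_cents) <= monthly_commitment_cents:
        return "on-demand"
    return "reserved"


if __name__ == "__main__":
    rate, commitment = 10, 4380  # hypothetical prices in cents
    print(break_even_hours(rate, commitment))
    for hours in (200, 700):
        print(hours, cheaper_option(hours, rate, commitment))
```

Workloads that run well below the break-even point favor pay-as-you-go, while steady, always-on workloads tend to favor a commitment.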

Decision Matrix for Evaluating Cloud Providers

A decision matrix can help systematically compare different cloud providers based on key criteria. The following table provides a framework for this evaluation. Remember to assign a weight to each criterion based on its relative importance to your specific application.

| Criterion | AWS | Azure | GCP | Weight |
|---|---|---|---|---|
| Compute Services | High | High | High | 0.2 |
| Storage Services | High | High | High | 0.15 |
| Database Services | High | High | High | 0.15 |
| Networking Services | High | High | High | 0.1 |
| Security Features | High | High | High | 0.15 |
| Pricing | Medium | Medium | Medium | 0.1 |
| Support | High | High | High | 0.15 |
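One way to turn the matrix into a single figure per provider is a weighted sum. The sketch below copies the ratings and weights from the table and assumes a point mapping of Low = 1, Medium = 2, High = 3, which is not part of the original framework. Because the table's ratings are identical across providers, all three scores tie; the calculation becomes informative once you substitute your own ratings.

```python
RATING_POINTS = {"Low": 1, "Medium": 2, "High": 3}  # assumed mapping

PROVIDERS = ("AWS", "Azure", "GCP")

# (criterion, ratings in PROVIDERS order, weight), copied from the table.
MATRIX = [
    ("Compute Services", ("High", "High", "High"), 0.20),
    ("Storage Services", ("High", "High", "High"), 0.15),
    ("Database Services", ("High", "High", "High"), 0.15),
    ("Networking Services", ("High", "High", "High"), 0.10),
    ("Security Features", ("High", "High", "High"), 0.15),
    ("Pricing", ("Medium", "Medium", "Medium"), 0.10),
    ("Support", ("High", "High", "High"), 0.15),
]


def weighted_scores(matrix):
    """Return each provider's weighted score; weights must sum to 1."""
    total_weight = sum(weight for _, _, weight in matrix)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError(f"weights sum to {total_weight}, expected 1.0")
    scores = dict.fromkeys(PROVIDERS, 0.0)
    for _, ratings, weight in matrix:
        for provider, rating in zip(PROVIDERS, ratings):
            scores[provider] += RATING_POINTS[rating] * weight
    return scores


if __name__ == "__main__":
    for provider, score in weighted_scores(MATRIX).items():
        print(f"{provider}: {score:.2f}")
```

Checking that the weights sum to 1 keeps scores comparable when criteria are added or reweighted.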

FAQ Summary

What is the difference between public, private, and hybrid cloud?

Public clouds are shared resources, offering scalability and cost-effectiveness. Private clouds are dedicated to a single organization, providing enhanced security and control. Hybrid clouds combine public and private resources, offering flexibility and customization.

How can I choose the right cloud provider?

Consider factors like pricing models, service level agreements (SLAs), geographic location of data centers, compliance certifications, and the provider’s expertise in your specific industry.

What are the key performance indicators (KPIs) for cloud server monitoring?

Key KPIs include CPU utilization, memory usage, disk I/O, network latency, and application response times. Monitoring these helps identify performance bottlenecks and optimize resource allocation.

What are the common security threats in cloud environments?

Common threats include data breaches, denial-of-service attacks, malware infections, misconfigurations, and insider threats. Robust security practices, including access control, data encryption, and regular security audits, are crucial.